Round-up of Nvidia GTC data center news

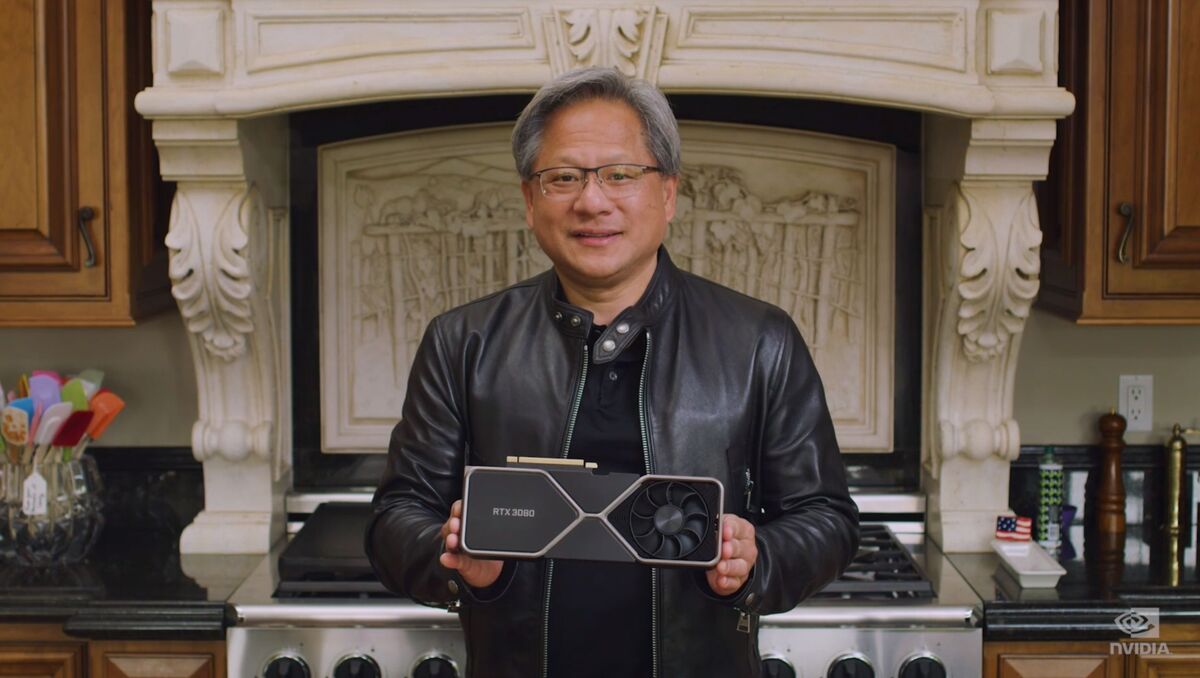

With a few dozen press releases and blog posts, no one can say that Nvidia's GPU Technology Conference (GTC) is a low-key affair. Like last year's show, it is virtual, so many of the announcements are coming from CEO Jen-Hsun Huang's kitchen.

Here is a rundown of the announcements data-center folks will care about most.

Two Ampere A100 offshoots

Nvidia’s flagship GPU is the Ampere A100, introduced last year. It is a powerful chip ideal for supercomputing, high-performance computing (HPC), and massive artificial intelligence (AI) projects, but it’s also overkill for some use cases and some wallets.

So at GTC the company introduced two smaller siblings of the A100: the A30 for mainstream AI and analytics servers, and the A10 for mixed compute and graphics workloads. Both are scaled down from the bigger, more powerful, and more power-hungry A100.

The A30, for instance, supports the full range of math precisions for AI and HPC workloads (from FP64 down to INT4) as well as the A100's multi-instance GPU (MIG) partitioning, but it offers half of the A100's performance, with less memory and fewer cores.

Nvidia's A10 is also derived from the A100 but is even lower-end than the A30. For example, it does not support FP64, only FP32. It also uses GDDR6 memory instead of the faster, more expensive HBM2 found on the A100. It is meant for workloads such as graphics, AI inference, and video encoding/decoding.

Nvidia has not set a release date for the two cards.

DGX For Lease

If every other hardware OEM offers a lease option, it only makes sense that Nvidia should too. The company's new cloud-native supercomputer, the Nvidia DGX Station A100, can now be leased for a short period and returned when you're done. The program is formally known as Nvidia Financial Solutions (NVFS).

It's a sensible move. Most organizations don't have constant supercomputing needs the way, say, Oak Ridge National Laboratory does. In many instances, they need supercomputing power only for short periods, perhaps a few times a year, so a large up-front investment in hardware that might sit unused makes no sense.

Nvidia also announced that the DGX SuperPod will be available with Nvidia's BlueField-2 DPUs, making it a cloud-native supercomputer. A DGX SuperPod is built by clustering many individual DGX A100 systems. It's a full bare-metal supercomputer, so you provide the operating environment, and it can be shared among multiple users.

Chip Roadmap

Nvidia is not sitting still on development, either. Huang laid out a roadmap of upcoming chips, and it's ambitious. Between now and 2025, Nvidia plans to release six generations' worth of hardware: two each for its GPU (Ampere's successors), Grace CPU, and BlueField DPU lines.

"Ampere Next" is the codename for the next-generation GPU, planned for a 2022 release. There is speculation that the product name will be Hopper, continuing the tradition of naming GPU architectures after computing pioneers. Paired with the new Arm-based Grace CPU, that would make an Nvidia Arm-plus-GPU system "Grace Hopper," after the legendary Navy computing pioneer. "Ampere Next Next" will debut in 2024 or 2025.

Grace is due in 2023 and "Grace Next" will arrive in 2025, while Nvidia's BlueField Data Processing Unit (DPU) will also see two new iterations: BlueField-3 in 2022 and BlueField-4 in 2024. Nvidia is making some huge performance claims. It says BlueField-3 will be 10 times faster than BlueField-2, and BlueField-4 could bring as much as a 100x performance boost.

The BlueField-3 DPU, which combines Arm CPU cores with a network processor to offload networking work from the host CPUs, comes with 400Gbps links and five times the Arm compute power of the current DPU. Nvidia says a single BlueField-3 DPU delivers data-center services that could otherwise consume up to 300 x86 CPU cores.

Using Arm technology

While Nvidia works out the kinks in its planned acquisition of Arm Holdings, it is making major efforts to boost Arm across the board. First, it announced it will provide GPU acceleration for Amazon Web Services' Graviton2, AWS's own Arm-based processor. The GPU-accelerated Graviton2 instances are meant to deliver rich game streaming and lower the cost of powerful AI inference.

On the client side, Nvidia is working with MediaTek, the world's largest supplier of smartphone chips, to create a new class of notebooks that pair an Arm-based CPU with an Nvidia RTX GPU. The goal is energy-efficient portables with "no-compromise" media capabilities, built on a reference platform that supports Chromium, Linux, and Nvidia SDKs.

And in edge computing, Nvidia is working with Marvell Semiconductor to pair Marvell's OCTEON Arm-based processors with Nvidia's GPUs. Together they will speed up AI workloads for network optimization and security.

And that’s just the data-center news.

Copyright © 2021 IDG Communications, Inc.