#RSAC: Bruce Schneier Warns of the Coming AI Hackers

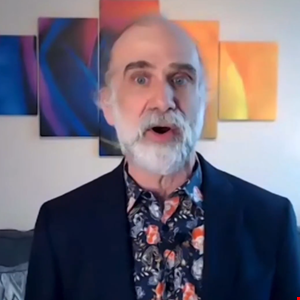

Artificial intelligence, commonly referred to as AI, represents both a risk and a benefit to the security of society, according to Bruce Schneier, security technologist, researcher, and lecturer at Harvard Kennedy School.

Schneier made his remarks about the risks of AI in an afternoon keynote session at the 2021 RSA Conference on May 17. For Schneier, hacking isn’t evil by definition; rather, it’s about subverting a system or a set of rules in a way that is unanticipated or unwanted by the system’s designers.

“All systems of rules can be hacked,” Schneier said. “Even the best-thought-out sets of rules will be incomplete or inconsistent, you’ll have ambiguities and things that designers haven’t thought of, and as long as there are people who want to subvert the goals in a system, there will be hacks.”

Hacking AI and the Explainability Problem

Schneier highlighted a key challenge with hacking that is conducted by some form of AI: it might be difficult to detect. Even if the hack is detected, it will be difficult to understand what exactly happened.

The so-called explainability problem is illustrated in the cult classic science fiction novel The Hitchhiker’s Guide to the Galaxy. Schneier recounted that in the novel, a race of hyper-intelligent pan-dimensional beings builds the universe’s most powerful computer, called Deep Thought, to answer the ultimate question of life, the universe, and everything. The answer was 42.

“Deep Thought was unable to explain its answer, or even what the question was, and that is the explainability problem,” Schneier said. “Modern AIs are essentially black boxes: data goes in one end, and the answer comes out the other.”

Schneier noted that researchers are working on explainable AI, but he doesn’t expect it to yield any short-term results for several reasons. In his view, explanations of how AI works are actually a cognitive shorthand used by humans, suited for the way humans make decisions.

“Forcing an AI to produce a human-understandable explanation is an additional constraint, and it could affect the quality of its decisions,” he said. “Certainly in the near term AI is becoming more opaque, less explainable.”

AI Hackers on the Horizon

At present, Schneier doesn’t see threat actors applying AI to malicious hacking on a mass scale, though that is a possible future that organizations should start to prepare for.

“While a world filled with AI hackers is still science fiction, it’s not stupid science fiction,” Schneier said.

To date, Schneier has observed that malicious hacking has been an exclusively human activity, as searching for new hacks requires expertise, creativity, time, and luck. When AI systems are able to conduct malicious hacking activities, he warned, they will operate at a speed and scale no human could ever achieve.

“As AI systems get more capable, society will cede more and more important decisions to them, which means that hacks of those systems will become more damaging,” he said. “These hacks will be perpetrated by the powerful against us.”

Defending Against AI Hackers

While the first part of Schneier’s talk was a grim and sobering sermon on the risks of AI hacking, he also pointed to potential upsides for cybersecurity.

“When AI is able to discover new software vulnerabilities in computer code, it will be an incredible boon to hackers everywhere,” Schneier said. “But that same technology will be useful for defense as well.”

A potential future AI tool could be deployed by a software vendor to find software vulnerabilities in its own code and automatically provide a fix. It’s a potential future that could eliminate software vulnerabilities as we know them today, Schneier stated optimistically.

“While it’s easy to let technology lead us into the future, we’re much better off if we as a society decide what technology’s role in our future should be,” Schneier concluded. “This is something we need to figure out now before these AIs come online and start hacking our world.”