Nvidia announces HPC and edge reference designs, liquid cooling plans

Nvidia unveiled high-performance computing (HPC) reference designs and new water-cooling technology for its GPUs at the annual Computex tradeshow in Taipei, Taiwan.

The reference designs employ Nvidia’s forthcoming Grace CPU and Grace Hopper Superchips, due next year. Grace is an Arm-based CPU, Nvidia’s first for the server market. Hopper is Nvidia’s next-generation GPU architecture.

There are two superchips: the Grace Superchip, which combines two Grace CPU dies linked by the chipmaker’s high-speed NVLink-C2C interconnect; and the Grace Hopper Superchip, which pairs one Grace CPU with one Hopper GPU, again connected directly by NVLink-C2C.

These are part of Nvidia’s HGX line for large HPC deployments, where compute density is the order of the day. They come in 1U and 2U designs with two HGX Grace Hopper nodes or four HGX Grace nodes in a single chassis. All four reference designs come with BlueField-3 networking processors.

The four designs are:

- Nvidia HGX Grace Hopper systems for AI training, inference and HPC

- Nvidia HGX Grace systems for HPC and supercomputing with a CPU-only design

- Nvidia OVX systems for digital twins (virtual copies of physical objects) and collaboration workloads

- Nvidia CGX systems for cloud graphics and gaming

“These new reference designs will enable our ecosystem to rapidly productize the servers that are optimized for Nvidia-accelerated computing software stacks, and [they] can be qualified as a part of our Nvidia-certified systems lineup,” said Paresh Kharya, director of datacenter computing, in a conference call with journalists.

Nvidia already has six partner vendors lined up to release systems in the first half of next year: Asus, Foxconn, Gigabyte, QCT, Supermicro, and Wiwynn.

Liquid cooling for GPUs

On the cooling front, Nvidia is acknowledging what gamers have known for some time: Its GPUs run very hot, and water cooling is a viable alternative to air cooling.

Nvidia’s current air-cooled 3000-series GPU cards are up to three inches thick. The board carrying the GPU is only a few millimeters thick; the heatsink and fan make up the difference, which makes the cards unwieldy and difficult to fit into tight spaces.

So Nvidia is taking a cue from hard-core gamers’ playbooks: It’s removing the heatsink and fan and replacing them with a much slimmer water block, similar to the water-cooling technology used on CPUs. Water is piped into the block over the processor, absorbs its heat, and is then pumped away to be cooled.

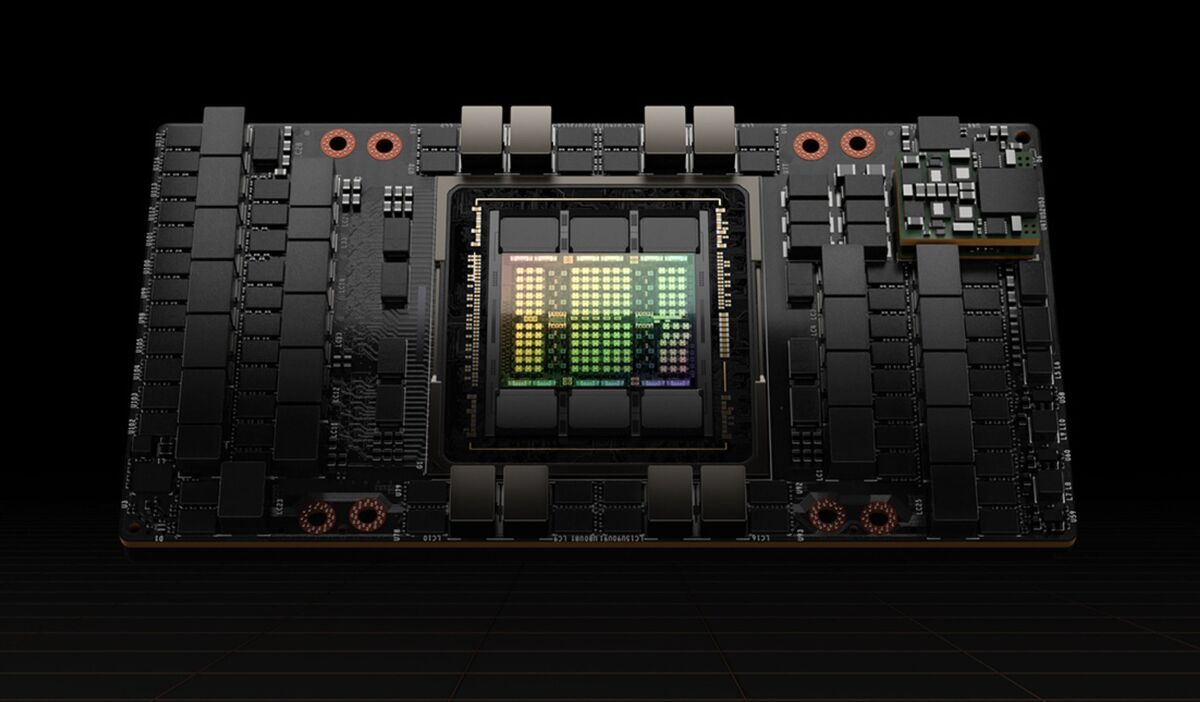
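To put the water-cooling loop described above in rough numbers, here is a back-of-the-envelope Python sketch of how much water must flow through a block to carry away a card’s heat, using the standard steady-state energy balance Q = ṁ · c_p · ΔT. The 300 W heat load and 10 K temperature rise are hypothetical inputs chosen for illustration, not Nvidia figures, and the function name `required_flow_lpm` is likewise illustrative.

```python
"""Back-of-the-envelope coolant flow for a liquid-cooled GPU water block.

Assumptions (illustrative only, not Nvidia figures): the card dissipates
300 W, and the water warms by 10 K between inlet and outlet.
"""

WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate for liquid water
WATER_DENSITY = 997.0  # kg/m^3, approximate at room temperature


def required_flow_lpm(heat_watts: float, delta_t_kelvin: float) -> float:
    """Return the water flow (litres per minute) needed to carry away heat_watts.

    Steady-state energy balance: Q = m_dot * c_p * dT, so m_dot = Q / (c_p * dT).
    """
    if heat_watts < 0 or delta_t_kelvin <= 0:
        raise ValueError("heat must be >= 0 and temperature rise must be > 0")
    mass_flow = heat_watts / (WATER_SPECIFIC_HEAT * delta_t_kelvin)  # kg/s
    volume_flow = mass_flow / WATER_DENSITY  # m^3/s
    return volume_flow * 1000.0 * 60.0  # m^3/s -> L/min


if __name__ == "__main__":
    # Hypothetical 300 W card with a 10 K inlet-to-outlet temperature rise.
    flow = required_flow_lpm(heat_watts=300.0, delta_t_kelvin=10.0)
    print(f"{flow:.2f} L/min")  # prints "0.43 L/min"
```

Under these assumptions a modest flow of roughly half a litre per minute is enough, which is part of why a thin water block can stand in for a bulky heatsink; allowing a larger temperature rise lowers the required flow proportionally.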

The result is a liquid-cooled PCIe card for Nvidia’s current flagship server GPU, the Ampere A100, that will be available in the third quarter of this year. Nvidia has a similar plan for the A100’s recently announced successor, the Hopper-powered H100, due next year. More than a dozen server makers are expected to support the liquid-cooled A100 PCIe card later this year, including Asus, Gigabyte, Inspur, and Supermicro.

The water block is much slimmer than the heatsink, and that’s key. An air-cooled card’s heatsink is so large that it blocks the adjacent PCI Express slot. With the water block, a second card can be placed in that slot.

“Liquid cooling has been around since the mainframe era, and it’s widely used today to cool the world’s fastest supercomputers. We typically support these deployments with our high-performance HGX platforms. However, now even the mainstream enterprise data centers are looking at options for liquid cooling in their data center infrastructures,” said Kharya.

Citing recent tests Nvidia conducted with Equinix, Kharya claimed that liquid-cooled data centers can deliver the same performance as air-cooled ones running the same cards while consuming up to 30% less power.
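To show what an “up to 30% less power” claim means in absolute terms, here is a small Python sketch of the arithmetic. Only the 30% upper bound comes from Nvidia’s claim; the 1,000 kW facility load and round-the-clock operation are hypothetical inputs, and the helper names are illustrative.

```python
"""Illustrate what an "up to 30% less power" claim means in absolute terms.

The 1,000 kW facility load and round-the-clock operation below are
hypothetical inputs chosen for illustration; only the 30% upper bound
comes from the claim reported in the article.
"""

HOURS_PER_YEAR = 24 * 365  # 8,760 h, ignoring leap years


def liquid_cooled_draw_kw(air_cooled_kw: float, savings_fraction: float = 0.30) -> float:
    """Power draw after applying a fractional saving (0.30 means 30% less)."""
    if not 0.0 <= savings_fraction <= 1.0:
        raise ValueError("savings_fraction must be between 0 and 1")
    return air_cooled_kw * (1.0 - savings_fraction)


def annual_savings_kwh(air_cooled_kw: float, savings_fraction: float = 0.30) -> float:
    """Energy saved over a year of continuous operation, in kWh."""
    saved_kw = air_cooled_kw - liquid_cooled_draw_kw(air_cooled_kw, savings_fraction)
    return saved_kw * HOURS_PER_YEAR


if __name__ == "__main__":
    air_kw = 1_000.0  # hypothetical air-cooled facility load
    print(liquid_cooled_draw_kw(air_kw))  # 700.0
    print(annual_savings_kwh(air_kw))  # 2628000.0
```

Because the claim is an upper bound, the 30% figure here represents a best case; real savings would depend on the facility and workload.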

Jetson for the edge

The company also announced broader deployment of its Jetson AGX Orin platform, with systems from more than two dozen partner vendors targeted at edge and embedded applications. Jetson is a low-power edge platform designed with AI processing in mind.

Jetson AGX Orin was announced earlier this year at Nvidia’s GTC show. It’s a developer kit built around a single-board computer that pairs an Arm CPU with 12 Cortex-A78AE cores and an Ampere-architecture GPU.

Nvidia claims 6,000 customers are building on the Jetson platform, and to address growing demand the company announced four new reference designs with varying memory configurations, available later this year.

Copyright © 2022 IDG Communications, Inc.