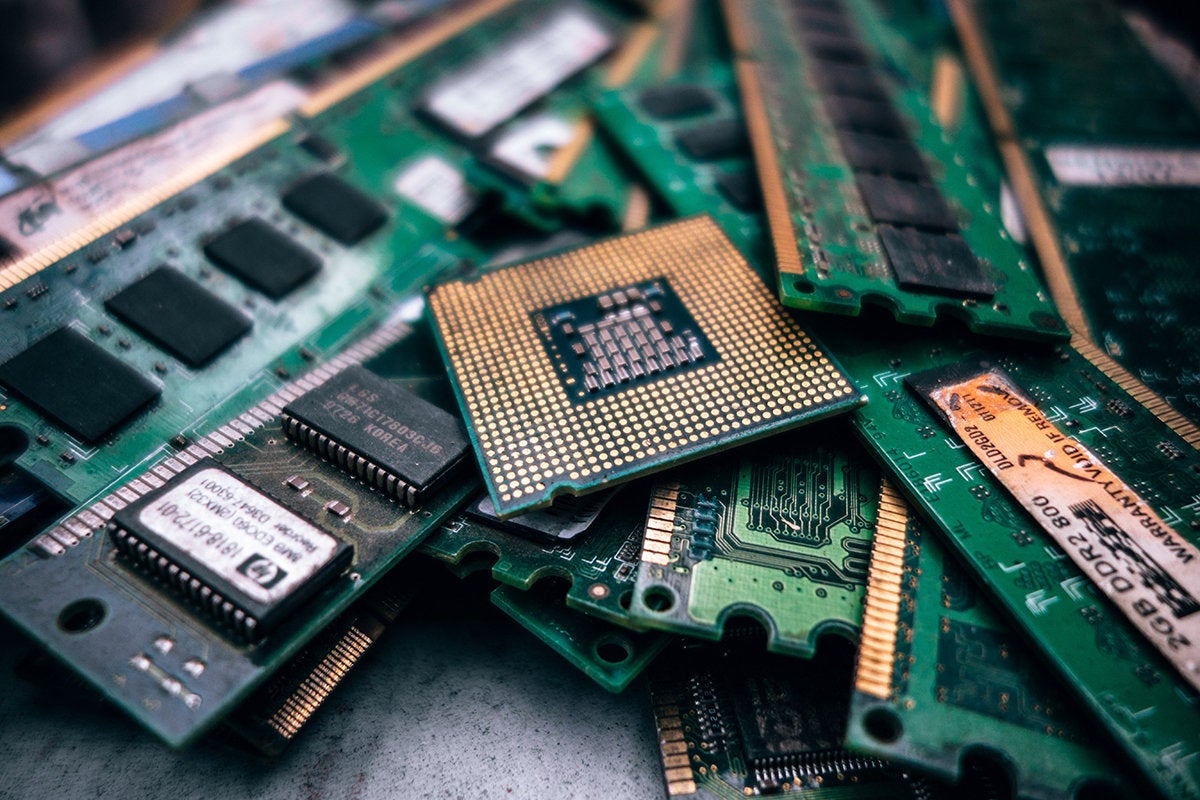

Samsung adds an AI processor to its High-Bandwidth memory to ease bottlenecks

A few months back, Samsung and Xilinx co-introduced an SSD with a Xilinx FPGA processor on-board, making computational storage very real. The SSD meant data could be processed where it resided rather than moving it to and from memory.

Now Samsung has introduced High Bandwidth Memory (HBM) integrated with an artificial intelligence (AI) processor, called the HBM-PIM. The new processing-in-memory (PIM) architecture brings AI processing capabilities inside the memory, rather than moving contents back and forth to the processor, to accelerate large-scale processing in data centers, high-performance computing (HPC) systems and AI-enabled mobile applications.

HBM is a different kind of memory than conventional DRAM. Its dies are stacked vertically rather than spread out in a 2D design, so data travels a shorter distance through memory. As a result, HBM chips are smaller than DRAM but also more expensive, costing around 30% more than DRAM of comparable capacity.

HBM is found primarily on CPUs and GPUs, and it usually sits right next to the processor chip to minimize the distance data has to travel. It’s found a home in HPC and AI applications, where money is less of an object than it is for the mass market.

Samsung notes that most of today’s computing systems are based on the von Neumann architecture, which uses separate processor and memory units to carry out data-processing tasks. That means moving data back and forth between the CPU and memory, through both the memory and CPU buses.

This sequential processing approach requires data to move back and forth constantly, creating a bottleneck that slows the whole system, especially when handling ever-increasing volumes of data. CPU vendors have tried for years to compensate by making L1 through L3 caches larger, to the point that the caches take up more space than the CPU core.

In contrast, HBM-PIM brings processing power directly to where the data is stored by placing a DRAM-optimized AI engine inside each memory bank (a storage sub-unit), enabling parallel processing and minimizing data movement.
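To make the difference in data movement concrete, here is a toy Python model. It is only a sketch under stated assumptions, not Samsung's design or programming interface: the names `TransferLog`, `host_side_sum` and `in_bank_sum`, the 4-byte word size, and the 16 banks of 1,000 values are all invented for illustration. It counts how many bytes cross a hypothetical memory bus when a processor sums every value itself, versus when each bank sums its own data and sends back a single partial result.

```python
from dataclasses import dataclass

WORD_BYTES = 4  # assumed size of one value; illustrative only


@dataclass
class TransferLog:
    """Counts bytes that cross the hypothetical memory bus."""
    bytes_moved: int = 0


def host_side_sum(banks, log):
    """Von Neumann style: every value travels to the processor before it is added."""
    total = 0
    for bank in banks:
        for value in bank:
            log.bytes_moved += WORD_BYTES  # one value crosses the bus
            total += value
    return total


def in_bank_sum(banks, log):
    """PIM style: each bank reduces its own data, then sends one partial sum."""
    total = 0
    for bank in banks:
        partial = sum(bank)  # stands in for the per-bank engine working locally
        log.bytes_moved += WORD_BYTES  # only the partial sum crosses the bus
        total += partial
    return total


# Hypothetical workload: 16 banks holding 1,000 values each.
banks = [list(range(i * 1000, (i + 1) * 1000)) for i in range(16)]

host_log, pim_log = TransferLog(), TransferLog()
host_total = host_side_sum(banks, host_log)
pim_total = in_bank_sum(banks, pim_log)

print("same answer:", host_total == pim_total)
print("bytes moved, host-side:", host_log.bytes_moved)
print("bytes moved, in-bank:", pim_log.bytes_moved)
```

The model ignores latency, bandwidth limits, and the fact that real per-bank engines would run concurrently. It only shows why computing next to the data shrinks bus traffic for reduction-style work.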

When applied to Aquabolt, Samsung’s current HBM2 memory, the new architecture can deliver more than twice the system performance while reducing energy consumption by more than 70%, according to Samsung. The company says the HBM-PIM also does not require any hardware or software changes, allowing faster integration into existing systems.

“Our groundbreaking HBM-PIM is the industry’s first programmable PIM solution tailored for diverse AI-driven workloads such as HPC, training and inference. We plan to build upon this breakthrough by further collaborating with AI solution providers for even more advanced PIM-powered applications,” said Kwangil Park, senior vice president of memory-product planning at Samsung Electronics, in a statement.

Samsung’s HBM-PIM is now being tested inside AI accelerators by leading AI solution partners, with all validations expected to be completed within the first half of this year.

Copyright © 2021 IDG Communications, Inc.