Network automation to the rescue in the data center

Let me start with something widely known: data centers benefit from being highly automated. Automated scripts complete their assigned tasks much faster than a human can, and they do so consistently, with fewer errors.

The data center network is the connecting fabric of all the workloads in a data center. Initially, network switches received extensive functionality to give them as much intelligence as possible, but today’s networking requirements have moved beyond what a box-by-box configuration solution can offer.

Deploying spine/leaf networks involves quite a bit of complexity. At the top of every data center rack is a pair of switches. Each top-of-rack switch pair needs to be configured to support bonded connections from workload infrastructure. VSX, a switch virtualization technology developed by HPE Aruba Networking, virtualizes the two switches into a single layer 2 device. This allows server or storage arrays to have a single connection to each switch in the top-of-rack pair. In a 12-rack data center, that would require configuring 24 different switches from the command line. Sounds like ‘low-hanging fruit’ for automation.
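To put the 12-rack arithmetic in concrete terms, here is a minimal Python sketch that enumerates the switches an automation tool would have to touch. The `TorSwitch` type, the hostname scheme, and the role labels are assumptions made up for illustration; they are not an HPE Aruba Networking API or naming convention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TorSwitch:
    hostname: str  # hypothetical naming scheme, for illustration only
    rack: int
    vsx_role: str  # assumed labels for the two members of a pair


def build_tor_inventory(rack_count: int) -> list[TorSwitch]:
    """Return two top-of-rack switches per rack, one per role."""
    inventory = []
    for rack in range(1, rack_count + 1):
        for index, role in enumerate(("primary", "secondary"), start=1):
            inventory.append(TorSwitch(f"rack{rack:02d}-tor{index}", rack, role))
    return inventory


inventory = build_tor_inventory(12)
print(len(inventory))         # 24
print(inventory[0].hostname)  # rack01-tor1
```

Once the inventory is data rather than a mental list, the same loop that counts the 24 switches can drive whatever tool renders and pushes their configuration.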

Once the top-of-rack switches are configured, the next task is to build the underlay network with OSPF. This makes each switch’s loopback, or internal IP address, ‘reachable’ from all others. If you have deployed VMware NSX, or you are designing for AI workloads, that would be the end of it. If not, and layer 2 extension over layer 3 is required, then there is a lot more to do. The overlay network will need to be configured to build VXLAN tunnels.
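Before OSPF can advertise loopbacks, every switch needs a unique one. As a rough sketch of how a script might hand them out, the Python below draws /32 addresses from a pool using the standard `ipaddress` module. The pool `10.255.0.0/24`, the switch names, and the `assign_loopbacks` helper are all assumptions for illustration, not values from any HPE Aruba Networking tool.

```python
import ipaddress


def assign_loopbacks(hostnames, pool="10.255.0.0/24"):
    """Map each hostname to a unique /32 loopback drawn from the pool."""
    hosts = iter(ipaddress.ip_network(pool).hosts())
    assignments = {}
    for name in hostnames:
        try:
            assignments[name] = f"{next(hosts)}/32"
        except StopIteration:
            # The pool ran out before every switch received an address.
            raise ValueError(f"pool {pool} is too small") from None
    return assignments


# Hypothetical switch names, in the order addresses are handed out.
switches = ["spine1", "spine2", "leaf1", "leaf2"]
loopbacks = assign_loopbacks(switches)
print(loopbacks["spine1"])  # 10.255.0.1/32
print(loopbacks["leaf2"])   # 10.255.0.4/32
```

The point of generating addresses instead of typing them is that duplicates, which would break reachability in the underlay, cannot creep in by hand.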

Multiprotocol BGP is used with EVPN, which enables automatic tunnel instantiation between matching Virtual Network Identifiers (VNIs). VNIs are mapped to Layer 2 VLANs, and once the VXLAN tunnels are established, all devices on a matching VLAN can reach each other. Additionally, symmetric integrated routing and bridging (IRB) will need to be configured if you want inter-VLAN communication.
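The "matching VNI" idea is easy to model. The Python sketch below maps VLANs to VNIs and then lists which pairs of tunnel endpoints share a VNI, and would therefore be expected to have a tunnel between them. The `VNI_BASE` offset, the leaf names, and the VLAN numbers are assumptions for illustration. This only models the expected outcome; it does not speak BGP or EVPN.

```python
from itertools import combinations

VNI_BASE = 10000  # assumed mapping rule: VLAN 10 -> VNI 10010


def vlan_to_vni(vlan_id: int) -> int:
    """Derive a VNI from a VLAN ID using the assumed fixed offset."""
    return VNI_BASE + vlan_id


def expected_tunnels(endpoint_vlans: dict) -> dict:
    """For each pair of endpoints, collect the VNIs they have in common."""
    tunnels = {}
    for a, b in combinations(sorted(endpoint_vlans), 2):
        shared = {vlan_to_vni(v) for v in endpoint_vlans[a] & endpoint_vlans[b]}
        if shared:  # no shared VNI means no tunnel is expected
            tunnels[(a, b)] = shared
    return tunnels


# Hypothetical leaf switches and the VLANs configured on each.
endpoint_vlans = {
    "leaf1": {10, 20},
    "leaf2": {10},
    "leaf3": {20, 30},
}
tunnels = expected_tunnels(endpoint_vlans)
print(tunnels[("leaf1", "leaf2")])   # {10010}
print(("leaf2", "leaf3") in tunnels)  # False
```

A table like this is handy as a pre-check: if the intended design says two racks should share a VLAN and the pair is missing here, the VLAN-to-VNI mapping is wrong before anything reaches a switch.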

This sounds like a lot of things to keep track of, but we are not finished yet! There is also Network Time Protocol (NTP) and Domain Name System (DNS) to configure.

Did I mention deploying security across all this yet?

I think by now the picture is starting to solidify: this whole process needs to be automated. No single network device can complete this task on its own. It will take several different solutions, linked together, just like the applications in a CI/CD pipeline. The result is a system that is fully automated, programmable, and scalable.

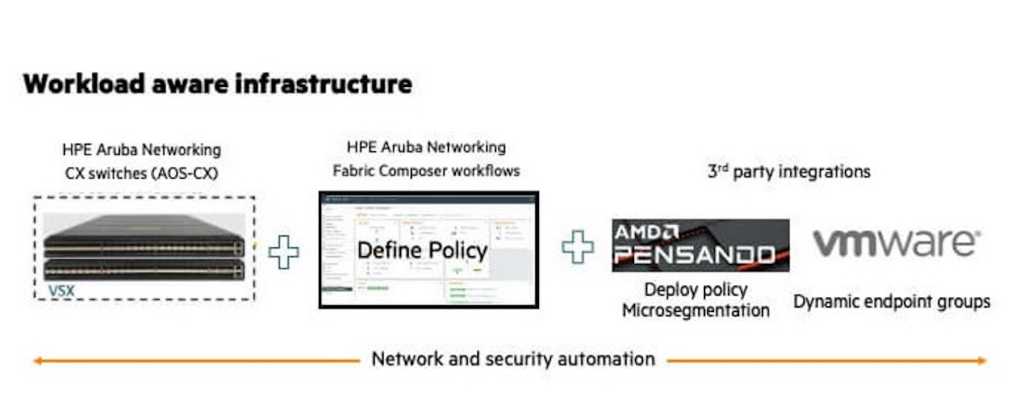

In this blog series, I will discuss in detail all the elements of the HPE Aruba Networking data center solution, consisting of the HPE Aruba Networking CX 10000 Switch Series, HPE Aruba Fabric Composer, and third-party, built-in integrations like AMD Pensando and VMware vSphere, to name a few.

Next up will be a review of the CX 10000 switch and its capabilities. The CX 10000 is a true fourth-generation, layer 3 switch. What makes it fourth generation is that it has two programmable P4 chips on the motherboard, each with 400G of bandwidth to the Trident chipset.

Who wouldn’t want 800G of STATEFUL firewall in every top of rack switch, and a convenient way to push policy to them?

More on that in the next chapter! Stay tuned!
