Tech Policy Expert Calls for Law Overhaul to Combat Deepfakes

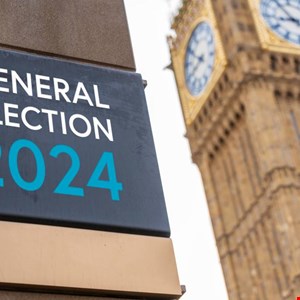

Three weeks before the UK general election, Matthew Feeney, head of tech and innovation at the UK-based Centre for Policy Studies, warned about the deepfake threat to election integrity in a new report.

The tech policy expert said that technological advances have made deepfakes easier and cheaper than ever to produce.

However, he cautioned against the inevitable kneejerk reaction to such technology, citing the precedent of other recent attempts to regulate new technologies.

In Facing Fakes: How Politics and Politicians Can Respond to the Deepfake Age, Feeney recommends that the UK government update existing laws instead of creating new ‘AI/deepfake regulations.’

He argued that governments should “police the content rather than the technology used to create it.”

He also advised the UK government to build on the AI Safety Summits and the work of the AI Safety Institute to set up a deepfake taskforce, sponsor further deepfake detection contests, and support the development of watermarking technologies.

UK’s First Deepfake General Election, But Not the Last

In the report, Feeney claimed: “We are in the midst of the UK’s first deepfake general election. Although the election campaigns are only a few weeks old, deepfake or other AI-generated content is already spreading rapidly.”

While some examples are harmless because the synthetic content is obviously fake, others are much more believable – and thus pose a risk.

The tech policy expert cited two examples: a video purporting to show Wes Streeting, Labour’s Shadow Health Secretary, calling Diane Abbott a ‘silly woman’ during an appearance on the current affairs TV show Politics Live, and another that appeared to show Labour's North Durham candidate, Luke Akehurst, using crude language to mock constituents.

He added: “Unfortunately for lawmakers, the nature of social media, the state of deepfake detection tools, the low cost of deepfake creation, and the limited reach of British law mean that we should expect harmful deepfake content to proliferate regardless of how Parliament acts. The current general election might be the UK’s first deepfake general election, but it will not be the last.”

He also believes that private sector-led deepfake mitigation initiatives, such as content watermarking solutions, should be supported but will probably fail to solve deepfake-powered foreign interference.

Tackling the Disinformation Risk While Preserving Tech Opportunities

Despite the risks that AI and deepfakes pose, Feeney argued against outright bans on AI or deepfake technologies, such as those supported by policy campaigns like ControlAI and Ban Deepfakes.

“Deepfakes have many valuable uses, which risk being undermined by legislation or regulation. Many content-creation and alteration technologies such as the printing press, radio, photography, film editing, CGI, etc. pose risks, but lawmakers have resisted bans on these technologies because of these risks,” he wrote.

Additionally, Feeney argued that to ban deepfakes, regulators must define what the term encompasses – which is a significant challenge.

Instead, the tech policy expert suggested that governments focus on the content rather than the technology.

He proposed that governments team up with the private sector and academia to address deepfake risks at two levels:

- At the operational level, by setting up public-private taskforces that build on the work done by the AI Safety Institute

- At the legislative level, by updating existing laws sanctioning false claims, hate speech, harassment, blackmail, fraud and other harmful behaviors