Getting Started with the Labs AI Tools for Devs Docker Desktop Extension

This ongoing Docker Labs GenAI series explores the exciting space of AI developer tools. At Docker, we believe there is a vast scope to explore, openly and without the hype. We will share our explorations and collaborate with the developer community in real time. Although developers have adopted autocomplete tooling like GitHub Copilot and use chat, there is significant potential for AI tools to assist with more specific tasks and interfaces throughout the entire software lifecycle. Therefore, our exploration will be broad. We will be releasing software as open source so you can play, explore, and hack with us, too.

We’ve released a simple way to run AI tools in Docker Desktop. With the Labs AI Tools for Devs Docker Desktop extension, people who want a simple way to run prompts can easily get started.

If you’re a prompt author, this approach also allows you to build, run, and share your prompts more easily. Here’s how you can get started.

Get the extension

You can download the extension from Docker Hub. Once it’s installed, enter an OpenAI key.

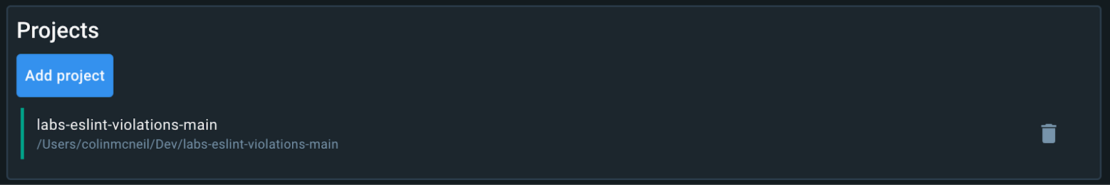

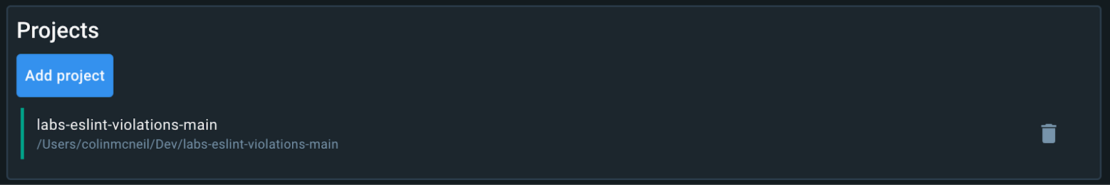

Import a project

With our approach, the information a prompt needs should be extractable from a project. Add projects here that you want to run SDLC tools inside (Figure 1).

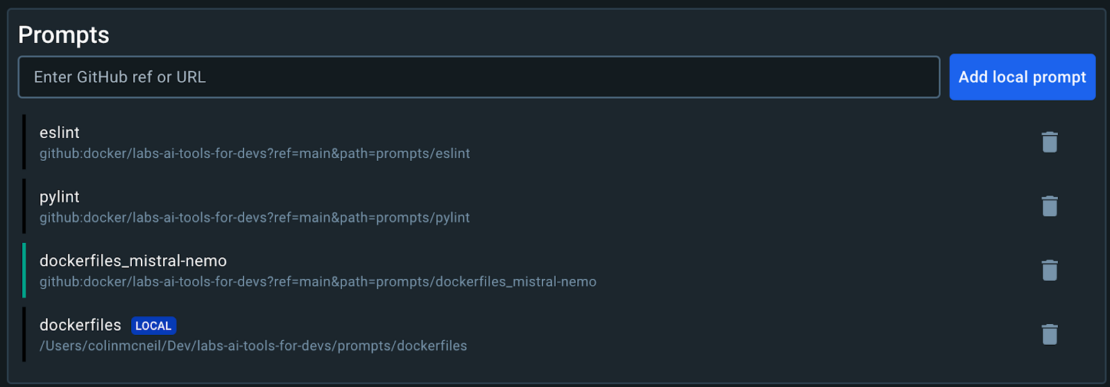

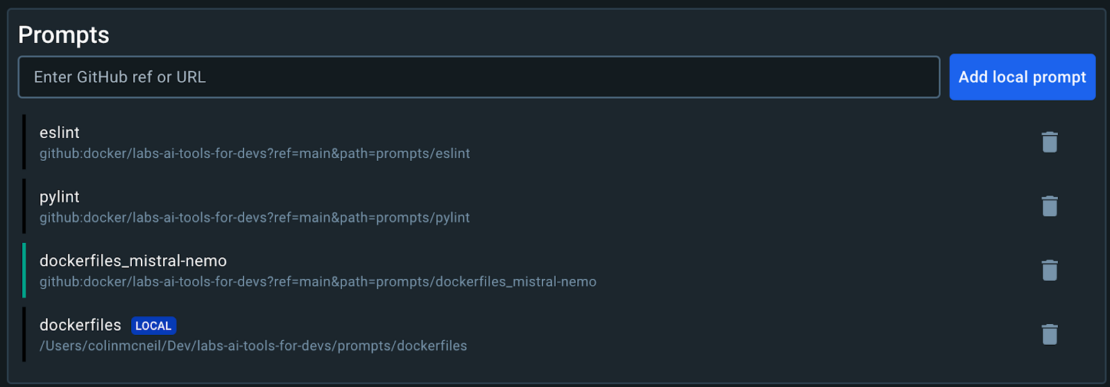

Inputting prompts

A prompt can be a git ref or a git URL, which will convert to a ref. You can also import your own local prompt files, which allows you to quickly iterate on building custom prompts.
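To illustrate what "a git URL, which will convert to a ref" could mean in practice, here is a minimal Python sketch. The `github:owner/repo?ref=...&path=...` shape used here is an assumption for illustration, as are the function name and the example repository; the extension's actual conversion logic is not shown in this post and may differ.

```python
from urllib.parse import urlencode, urlparse


def github_url_to_ref(url: str) -> str:
    """Turn a GitHub tree/blob URL into a compact ref string.

    The output format is an assumed shape, not a documented spec.
    Branch names that contain slashes are not handled by this sketch.
    """
    parsed = urlparse(url)
    if parsed.netloc != "github.com":
        raise ValueError(f"not a github.com URL: {url}")

    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"URL does not name a repository: {url}")
    owner, repo = parts[0], parts[1]

    query = {}
    # Browser URLs look like /owner/repo/tree/<branch>/<path...>
    # (or /blob/ instead of /tree/ when pointing at a single file).
    if len(parts) >= 4 and parts[2] in ("tree", "blob"):
        query["ref"] = parts[3]
        if len(parts) > 4:
            query["path"] = "/".join(parts[4:])

    ref = f"github:{owner}/{repo}"
    if query:
        # Keep slashes readable inside the path component.
        ref += "?" + urlencode(query, safe="/")
    return ref


# Hypothetical repository, used only as an example input.
print(github_url_to_ref(
    "https://github.com/example-org/example-repo/tree/main/prompts/docker"
))
```

The point of a compact ref is that it pins a repository, a branch, and a folder in one string that is easy to copy and paste.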

Sample prompts

(copy + paste the ref)

Writing and testing your own prompt

Create a prompt file

A prompt file is a markdown file. Here’s an example: prompt.md

# prompt system

You are an assistant who can write comedic monologues in the style of Stephen Colbert.

# prompt user

Tell me about my project.

Now, we need to add information about the project. This is done with mustache templates:

# prompt system

You are an assistant who can write comedic monologues in the style of Stephen Colbert.

# prompt user

Tell me about my project.

My project uses the following languages:

{{project.languages}}

My project has the following files:

{{project.files}}

Leverage tools in your project
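Before turning to tools, here is a rough Python sketch of how the mustache placeholders in the prompt file above might be filled in and how the `# prompt system` and `# prompt user` sections could be turned into chat messages. This is not the extension's implementation: the regex-based `render` function handles only simple `{{dotted.name}}` lookups (not the full Mustache spec), and the `context` values are made up to stand in for whatever the extractors report about a project.

```python
import re

PROMPT = """\
# prompt system

You are an assistant who can write comedic monologues in the style of Stephen Colbert.

# prompt user

Tell me about my project.

My project uses the following languages:

{{project.languages}}

My project has the following files:

{{project.files}}
"""


def render(template: str, context: dict) -> str:
    """Replace {{dotted.name}} placeholders with values from context."""
    def lookup(match: re.Match) -> str:
        value = context
        for key in match.group(1).split("."):
            value = value[key]
        # Lists are rendered one item per line.
        if isinstance(value, list):
            return "\n".join(str(item) for item in value)
        return str(value)

    return re.sub(r"\{\{\s*([\w.]+)\s*\}\}", lookup, template)


def split_messages(rendered: str) -> list[dict]:
    """Split on '# prompt <role>' headings into chat-style messages."""
    pieces = re.split(r"^# prompt (\w+)[ \t]*$", rendered, flags=re.MULTILINE)
    # pieces is [preamble, role1, body1, role2, body2, ...]
    return [
        {"role": role, "content": body.strip()}
        for role, body in zip(pieces[1::2], pieces[2::2])
    ]


# Hypothetical extractor output for a small project.
context = {
    "project": {
        "languages": ["JavaScript", "Dockerfile"],
        "files": ["Dockerfile", "main.js"],
    }
}

messages = split_messages(render(PROMPT, context))
for message in messages:
    print(f"[{message['role']}]")
    print(message["content"])
    print()
```

Keeping project facts in the template context means the prompt text itself stays reusable across projects.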

Just like extractors, which can be used to render prompts, we define tools in the form of Docker images. A function image follows the same spec as extractors but in reverse.

- The project is automatically bind-mounted into the container started from the Docker image.

- The Docker image entry point is automatically run within the project using --workdir.

- The first argument will be a JSON payload. This payload is generated when the LLM tries to call our function.
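As a rough illustration of the contract just described, the following Python sketch shows what an entry point for a file-writing function image might look like. It assumes the payload matches the `write_files` parameters defined in this section (a `files` array of `path`, `content`, and `executable`) and that the working directory is the project root. It is not the source of the `vonwig/function_write_files` image, and the path-escape check is an extra precaution added here.

```python
import json
import sys
from pathlib import Path


def write_files(payload: dict, root: Path) -> list[str]:
    """Write each file in the payload under root; return relative paths written."""
    root = root.resolve()
    written = []
    for entry in payload.get("files", []):
        target = (root / entry["path"]).resolve()
        # Refuse paths that would escape the project directory.
        if not target.is_relative_to(root):
            raise ValueError(f"path escapes project: {entry['path']}")
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(entry["content"])
        if entry.get("executable"):
            # Add the execute bits on top of the existing mode.
            target.chmod(target.stat().st_mode | 0o111)
        written.append(str(target.relative_to(root)))
    return written


if __name__ == "__main__" and len(sys.argv) > 1:
    # The first argument is the JSON payload generated by the LLM's call.
    # The working directory is assumed to be the bind-mounted project.
    for path in write_files(json.loads(sys.argv[1]), Path.cwd()):
        print(f"wrote {path}")
```

Because the function receives everything through one JSON argument and acts on the current directory, the same image can be reused on any project without extra configuration.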

- name: write_files
  description: Write a set of files to my project
  parameters:
    type: object
    properties:
      files:
        type: array
        items:
          type: object
          properties:
            path:
              type: string
              description: the relative path to the file that should be written
            content:
              type: string
              description: the content that should be written to a file
            executable:
              type: boolean
              description: whether to make the file executable
  container:
    image: vonwig/function_write_files:latest

Test your prompt

1. Add the file to a Git repository and push to a public remote.

2. Paste the URL to reference the file on GitHub. Alternatively, import a local prompt and select the file on your computer.

3. Run.

## ROLE assistant

Don't even get me started on the files, I mean, have you ever sat down and really looked at a list of files? This project has got more layers than that seven-layer bean dip I had at last weekend's potluck. This project isn't just files on files, its files within files, its dot something after dot something else – and before you ask: Yes, all of these are REQUIRED!

Coming down to Dockerfile. Now, I've seen some Dockerfiles but our Dockerfile, folks, it's something else. It lifts, it grinds, it effectively orchestrates our code like a veteran conductor at the symphony. We also have multiple templates because who doesn't love a good template, right?

Oh, and did I mention the walkthroughs and the resources? Let's just say this isn't a "teach a man to fish" situation. This is more of a “teach a man to create an entire fishing corporation” scenario. Now THAT'S dedication.

Finally we've got the main.js, and let's be real, is it even a project without a main.js anymore?

As always, feel free to follow along in our new public repo. Everything we’ve discussed in this blog post is available for you to try out on your own projects.

For more on what we’re doing at Docker, subscribe to our newsletter.