Improve File Operation Speeds by 2-10x with Synchronized File Shares in Docker Desktop

We are happy to announce that Mutagen’s file-sharing technology, acquired by Docker, is now integrated into Docker Desktop as the synchronized file shares feature, which is available today. This enhancement brings fast and flexible host-to-VM file sharing, offering a performance boost for developers dealing with extensive codebases.

Synchronized file shares overcome the limitations of traditional bind mounts by providing native file system performance, so developers can see file operations run 2-10x faster. Simply log in to Docker Desktop with your subscription account (Docker Pro, Teams, or Business) to experience this new time-saving feature.

Improving the developer experience

Synchronized file shares improve the backend developer experience: file operations that used to stall on traditional file-sharing mechanisms finish sooner, and that time goes back to development. Synchronized file sharing is ideal for developers who:

- Manage large repositories or monorepos with more than 100,000 files, totaling hundreds of megabytes or even gigabytes.

- Utilize virtual file systems (such as VirtioFS, gRPC FUSE, or osxfs) and face scalability issues with their workflows.

- Encounter performance limitations and want a seamless file-sharing solution without worrying about ownership conflicts.

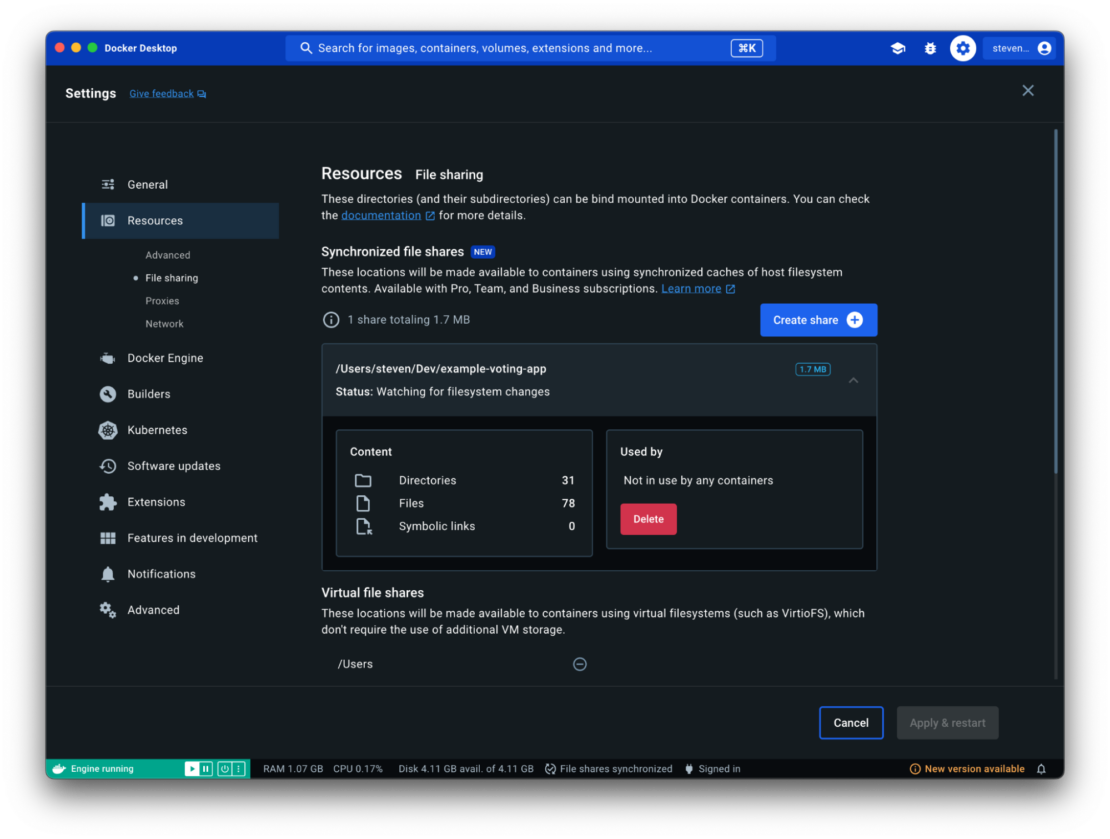

To get started, go to Settings and navigate to the File sharing tab within the Resources section (Figure 1). You can learn more about the functionality and how to use it in our documentation.

How Docker solves the problem

Using synchronized file system caches to improve bind mount performance isn’t a new idea, but this functionality has never been available to developers as an ergonomic first-party solution. With Docker’s acquisition of Mutagen, we’re now in a position to offer an easy-to-use and transparent mechanism with potentially order-of-magnitude improvements to developer workflows.

Bind mounts are the mechanism that Linux uses to make files (like code, scripts, and images) available to containers. They’re what you get when you specify a host path to the -v/--volume flag in docker run or docker create commands (or a host path under volumes: in Compose). If folders are bind-mounted in read/write mode (the default), they also allow containers to write back to the host file system, which is great for getting files (like build products) out of containers.
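As a minimal sketch of the bind mount syntax described above, the helper below assembles the argument list for a `docker run` call with one `-v HOST:CONTAINER` bind mount. The function name, the example paths, and the `node:20` image are illustrative assumptions, and the helper only builds the command; it does not run it.

```python
def bind_mount_run_args(host_dir, container_dir, image, command, read_only=False):
    """Build the argv for `docker run` with a single bind mount.

    Hypothetical helper for illustration only. It assumes a POSIX-style
    absolute host path; a source without a leading slash would be treated
    by Docker as a named volume rather than a bind mount.
    """
    if not host_dir.startswith("/"):
        raise ValueError("expected an absolute host path for a bind mount")
    # -v takes HOST_PATH:CONTAINER_PATH, optionally followed by :ro.
    spec = f"{host_dir}:{container_dir}"
    if read_only:
        spec += ":ro"  # read-only: the container cannot write back to the host
    return ["docker", "run", "--rm", "-v", spec, image, *command]


if __name__ == "__main__":
    # Example values are placeholders, not taken from the article.
    print(" ".join(bind_mount_run_args("/home/dev/project", "/app", "node:20", ["npm", "test"])))
```

Leaving `read_only` at its default mirrors the read/write behavior described above, where build products written inside the container appear on the host.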

When using containers natively on Linux, for example with Docker Engine, this functionality is enabled by the Linux kernel and comes with no performance impact. When using a cross-platform solution like Docker Desktop, the necessity of virtualization means that an additional file-sharing mechanism between the host system and the Linux VM is required to enable bind mounts.

Historically, Docker has used a number of virtual file system solutions to enable this host/VM file sharing, with different solutions available based on the host platform. The most recent of these mechanisms, VirtioFS, provides an excellent out-of-the-box file-sharing solution for most developers and projects, and we’re continuing to invest in further performance improvements. These virtual file systems operate by running a file server on the host, providing files on demand via FUSE-backed file systems within the VM.

Although virtual file systems work great for most cases, there are projects where additional performance is required. In cases where a project contains many thousands (or even millions) of files totaling hundreds of megabytes or gigabytes, the demanding system calls used by development tools can lead to extremely slow behavior.

Your project might fall into this category even if it contains only a single file — look at the staggering tree of dependencies that modern frameworks bring into your node_modules directory, for example. Modern developer tools like compilers, dynamic language runtimes, and package managers love to traverse file systems, issuing thousands or millions of readdir(), stat(), and open()/read()/write()/close() calls. With virtual file systems, each of these system calls has to be sent across the host/VM boundary (in addition to incurring the standard round trips between kernel space and user space within the Linux VM when using the FUSE stack).
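To get a rough feel for how many calls a full traversal implies, the sketch below counts the directories and files under a project root and multiplies by a per-call cost. The model (one listing per directory plus one stat per file) is a deliberate simplification, and the per-call cost is a placeholder you would have to measure yourself, not a figure from Docker.

```python
import os


def count_entries(root):
    """Return (directories, files) found under root, including root itself."""
    dirs = files = 0
    for _path, _dirnames, filenames in os.walk(root):
        dirs += 1
        files += len(filenames)
    return dirs, files


def estimated_traversal_seconds(dirs, files, per_call_seconds):
    """Estimate one full traversal under a simplified cost model.

    Assumption: one directory listing per directory plus one stat() per
    file, each paying the same fixed cost. Real tools issue many more calls.
    """
    return (dirs + files) * per_call_seconds


if __name__ == "__main__":
    d, f = count_entries(".")
    # 0.0005 s per call is a hypothetical value, not a measurement.
    print(d, f, estimated_traversal_seconds(d, f, 0.0005))
```

Running it against a project that includes node_modules usually makes the point quickly: the entry count is driven by dependencies, not by the handful of files you wrote yourself.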

Using synchronized file shares

This is where synchronized file shares come into play. With synchronized file shares, developers can create ext4-backed caches of host file system locations inside the Docker Desktop VM. This means all those expensive file system calls are now handled directly by the Linux kernel on a native file system. These caches are kept in sync with the host file system using the Mutagen file synchronization engine, so the files are propagated bidirectionally with ultra-low latency. For most developers, there should be no perceptible difference in the file-sharing experience, other than improved performance!

So what’s the trade-off? Well, you’ll pay to store the files twice (the originals on the host and the cache inside the VM). Given the relatively low cost of disk space, compared with the high cost of developer time, this trade-off is usually a no-brainer.
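Before creating a share, it can help to check how much data would be duplicated. The sketch below sums the apparent size of regular files under a directory. It is only an approximation of the extra space needed, since the size of the cache inside the VM may differ from the sum of file sizes on the host.

```python
import os


def tree_size_bytes(root):
    """Sum the apparent size of regular files under root, skipping symlinks."""
    total = 0
    for path, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(path, name)
            if not os.path.islink(full):
                total += os.path.getsize(full)
    return total


if __name__ == "__main__":
    size = tree_size_bytes(".")
    print(f"approximately {size / 1_000_000:.1f} MB would be stored a second time")
```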

To keep you in control of what gets synced, we’ve made synchronized file shares a granular, opt-in experience (we don’t want to sync your entire hard drive by default). We’ve worked hard to make this step as easy as possible — select Create share in the File sharing settings pane and choose the location you want.

The opt-in nature of synchronized file shares also makes it easy to adopt either gradually or selectively, so there’s no need to impose changes on your entire team. Any bind mount that can’t be served from a synchronized file share’s cache falls back to your default virtual file-sharing mechanism, meaning there’s no change to your existing workflows. Team members can opt in to synchronized file shares as necessary, using the functionality as a strategic optimization for specific parts of a codebase.
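The fallback behavior can be pictured with a small model. The sketch below assumes that a share covers a bind mount when the mount's host path equals the share location or lies beneath it; that matching rule and the function name are assumptions made for illustration, not a description of Docker Desktop's internals.

```python
from pathlib import PurePosixPath


def served_by_share(bind_source, share_roots):
    """Return True if bind_source equals or sits under any share root.

    Simplified, hypothetical model using POSIX-style paths. A False
    result stands for "falls back to the default file-sharing mechanism".
    """
    source = PurePosixPath(bind_source)
    for root in share_roots:
        root_path = PurePosixPath(root)
        # Compare whole path components, so /repo-old is not matched by /repo.
        if source == root_path or root_path in source.parents:
            return True
    return False


if __name__ == "__main__":
    shares = ["/home/dev/monorepo"]  # example share location (placeholder)
    for mount in ("/home/dev/monorepo/services/api", "/home/dev/scratch"):
        print(mount, "->", "share" if served_by_share(mount, shares) else "fallback")
```

Matching on path components rather than string prefixes is the one design choice worth noting: it keeps a sibling directory with a similar name from being treated as part of the share.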

Conclusion

We’re excited about this latest time-saving feature and what it means for you: more free time, higher productivity, and room to focus on innovation. We continue to invest in modernizing the Docker Desktop developer experience, and synchronized file shares is the latest enhancement.