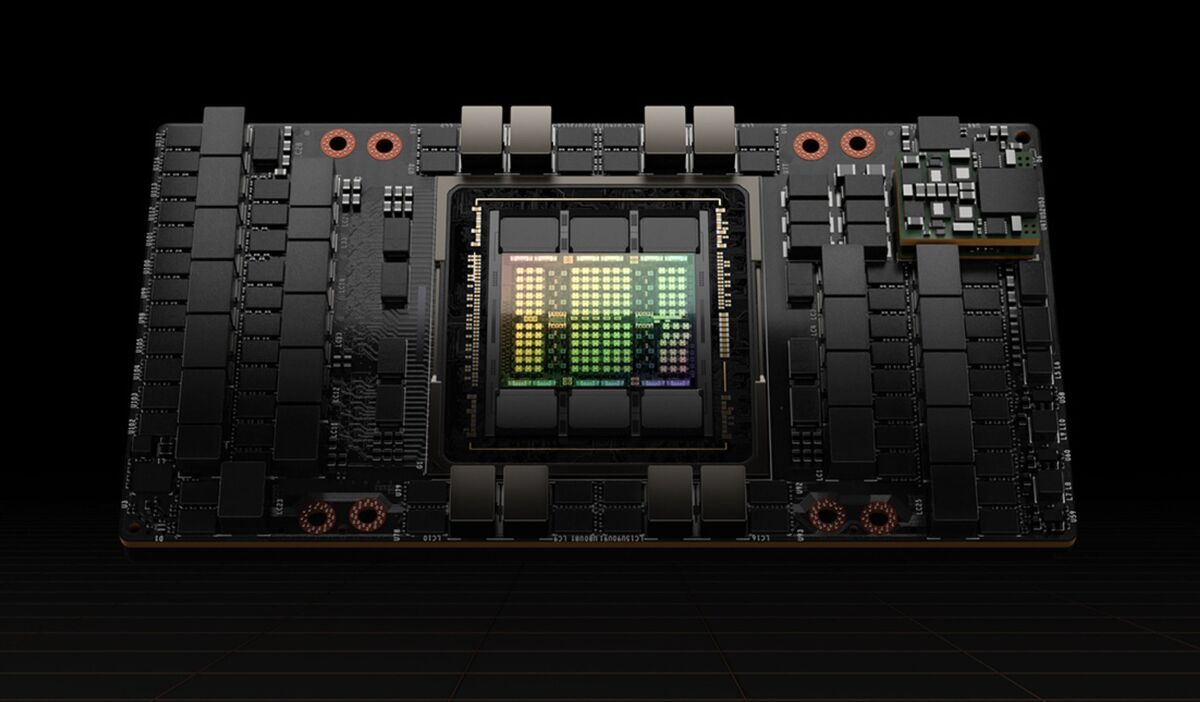

Inside Nvidia’s new AI supercomputer

Nvidia has introduced a supercomputer built around its Arm-based Grace processor and designed for AI processing on a combined CPU/GPU architecture.

The new system, the DGX GH200 supercomputer, was formally introduced at the Computex tech conference in Taipei. It is powered by 256 Grace Hopper Superchips, each of which combines Nvidia’s Grace CPU, a 72-core Arm processor designed for high-performance computing, with a Hopper GPU. The two are connected by Nvidia’s proprietary NVLink-C2C high-speed interconnect.
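
As a back-of-the-envelope illustration of that composition, the sketch below models the system as a set of identical superchips and totals the CPU cores and GPUs. The `Superchip` class and `system_totals` helper are hypothetical names made up for this example, not part of any Nvidia software; the only inputs are the figures stated above (256 superchips, each with one 72-core Grace CPU and one Hopper GPU).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """Hypothetical model of one Grace Hopper Superchip (Grace CPU + Hopper GPU)."""
    cpu_cores: int = 72  # Grace is a 72-core Arm CPU
    gpus: int = 1        # one Hopper GPU per superchip


def system_totals(num_superchips: int, chip: Superchip = Superchip()) -> dict:
    """Aggregate CPU cores and GPUs for a system built from identical superchips."""
    return {
        "superchips": num_superchips,
        "cpu_cores": num_superchips * chip.cpu_cores,
        "gpus": num_superchips * chip.gpus,
    }


totals = system_totals(256)
print(totals)  # {'superchips': 256, 'cpu_cores': 18432, 'gpus': 256}
```

In other words, a full DGX GH200 brings 18,432 Arm cores alongside its 256 GPUs.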

The DGX GH200 features a massive shared memory space of 144TB, pooling the HBM3 attached to each GPU with the LPDDR5X attached to each Grace CPU, all linked by Nvidia’s NVLink interconnect technology. The system is a simplified design: its software sees its processors as one giant GPU with one giant memory pool, said Ian Buck, vice president and general manager of Nvidia’s hyperscale and HPC business unit.
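
The 144TB figure can be reconstructed with simple arithmetic. The sketch below assumes Nvidia's launch specifications for each GH200 superchip, 96GB of HBM3 on the GPU and 480GB of LPDDR5X on the CPU; those per-chip numbers come from Nvidia's product material rather than this article, so treat them as assumptions. It also shows that the total matches 144TB when counted in binary (1,024GB) units.

```python
# Assumed per-superchip memory, from Nvidia's GH200 launch specs (not stated in this article)
HBM3_GB_PER_GPU = 96
LPDDR5X_GB_PER_CPU = 480
NUM_SUPERCHIPS = 256  # from the article


def pooled_memory_gb(num_superchips: int) -> int:
    """Total memory in the shared pool if every superchip contributes its GPU and CPU memory."""
    return num_superchips * (HBM3_GB_PER_GPU + LPDDR5X_GB_PER_CPU)


total_gb = pooled_memory_gb(NUM_SUPERCHIPS)
print(total_gb)                     # 147456
print(total_gb / 1024)              # 144.0 (binary terabytes)
print(total_gb // HBM3_GB_PER_GPU)  # 1536: the pool is 1,536x a single GPU's HBM3
```

Under these assumptions, a single GPU sees 96GB of fast local memory, while the shared pool is more than 1,500 times larger, which is the gap Buck describes next.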

He said the system, deployed with Nvidia’s help, is intended for training AI models whose memory requirements exceed what a single GPU supports. “We need a completely new system architecture that can break through one terabyte of memory in order to train these giant models,” he said.

Nvidia claims an exaFLOP of performance, but that figure is based on eight-bit FP8 processing. Most AI processing today uses 16-bit Bfloat16 instructions, which deliver roughly half the throughput, so the same work would take about twice as long. One way of looking at it: you could have a supercomputer that ranks in the top 10 of the TOP500 supercomputer list while occupying a comparatively modest space.
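
To make the FP8 versus Bfloat16 comparison concrete, here is a rough sketch. It takes the article's one-exaFLOP FP8 headline and 256 GPUs as given, and assumes, as the paragraph does, that Bfloat16 runs at half the FP8 rate. `WORKLOAD_FLOPS` is a hypothetical job size chosen only to show the "twice as long" effect; real jobs rarely sustain peak throughput.

```python
NUM_GPUS = 256
FP8_SYSTEM_FLOPS = 1e18  # Nvidia's headline figure: one exaFLOP at FP8

# Assumption from the text: Bfloat16 delivers about half the FP8 throughput.
BF16_TO_FP8_RATIO = 0.5

per_gpu_fp8 = FP8_SYSTEM_FLOPS / NUM_GPUS
system_bf16 = FP8_SYSTEM_FLOPS * BF16_TO_FP8_RATIO


def seconds_for(work_flops: float, throughput_flops: float) -> float:
    """Idealized runtime at a constant peak throughput."""
    return work_flops / throughput_flops


WORKLOAD_FLOPS = 1e21  # hypothetical training job size

print(f"FP8 per GPU: {per_gpu_fp8 / 1e15:.2f} PFLOPS")  # 3.91 PFLOPS
print(f"BF16 system: {system_bf16 / 1e15:.0f} PFLOPS")  # 500 PFLOPS
print(seconds_for(WORKLOAD_FLOPS, FP8_SYSTEM_FLOPS))     # 1000.0
print(seconds_for(WORKLOAD_FLOPS, system_bf16))          # 2000.0
```

Halving the per-operation throughput doubles the idealized runtime, which is all the "twice as long" claim amounts to.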

By using NVLink instead of standard PCI Express interconnects, the system gets seven times the bandwidth between GPU and CPU while using a fifth of the interconnect power.
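
As a rough check on the "seven times" figure, the sketch below assumes a PCIe Gen5 x16 link provides about 128GB/s of total bidirectional bandwidth. That baseline is an assumption, not a number from the article; multiplied by seven, it lands close to the 900GB/s Nvidia quotes for NVLink-C2C. The 64GB `payload_gb` value is a hypothetical transfer size, and the timing ignores latency and protocol overhead.

```python
PCIE_GEN5_X16_GBPS = 128.0  # assumption: ~128 GB/s total for a PCIe Gen5 x16 link
NVLINK_SPEEDUP = 7          # the article's "seven times" figure

nvlink_gbps = PCIE_GEN5_X16_GBPS * NVLINK_SPEEDUP


def transfer_seconds(gigabytes: float, bandwidth_gbps: float) -> float:
    """Idealized time to move data at a sustained bandwidth (no latency or overhead)."""
    return gigabytes / bandwidth_gbps


payload_gb = 64.0  # hypothetical amount of data moved between CPU and GPU memory

print(nvlink_gbps)                                                 # 896.0
print(transfer_seconds(payload_gb, PCIE_GEN5_X16_GBPS))            # 0.5
print(round(transfer_seconds(payload_gb, nvlink_gbps), 4))         # 0.0714
```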

Google Cloud, Meta, and Microsoft are among the first expected to gain access to the DGX GH200 to explore its capabilities for generative AI workloads. Nvidia also intends to provide the DGX GH200 design as a blueprint to cloud service providers and other hyperscalers so they can further customize it for their infrastructure. Nvidia DGX GH200 supercomputers are expected to be available by the end of the year.

Software is included.

These supercomputers ship with Nvidia software preinstalled, making them a turnkey product. The bundle includes Nvidia AI Enterprise, the primary software layer for Nvidia’s AI platform, featuring frameworks, pretrained models, and development tools, as well as Base Command for enterprise-level cluster management.

DGX GH200 is the first supercomputer to pair Grace Hopper Superchips with Nvidia’s NVLink Switch System, the interconnect that enables the GPUs in the system to work together as one. The previous-generation system maxed out at eight GPUs working in tandem.

Building the full-size system still requires significant data-center real estate. Each 15-rack-unit chassis holds eight compute nodes, and there are two chassis per rack (or pod, in Nvidia parlance), along with NVSwitch, Ethernet, and IP connectivity. Up to eight of the pods can be linked for up to 256 processors.

The system is air-cooled even though each Hopper GPU draws 700 watts of power, which generates considerable heat. Nvidia said it is developing liquid-cooled systems internally and discussing them with customers and partners, but for now the DGX GH200 is cooled by fans.
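
To put the 700-watt figure in perspective, the short sketch below multiplies it across the 256 GPUs in a full system. It counts GPU power only; the Grace CPUs, memory, switches, and fans add more on top, so treat the result as a lower bound on the heat the air-cooling design has to handle.

```python
NUM_GPUS = 256       # GPUs in a full DGX GH200, per the article
WATTS_PER_GPU = 700  # Hopper GPU power draw cited in the article


def gpu_heat_kw(num_gpus: int, watts_per_gpu: float) -> float:
    """GPU power draw in kilowatts; at steady state nearly all of it becomes heat to remove."""
    return num_gpus * watts_per_gpu / 1000


print(gpu_heat_kw(NUM_GPUS, WATTS_PER_GPU))  # 179.2
```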

So far, potential users of the system aren’t ready for liquid cooling, said Charlie Boyle, vice president of DGX systems at Nvidia. “There will be points in the future where we’ll have designs that have to be liquid cooled, but we were able to keep this one on air,” he said.

Nvidia announced at Computex that the Grace Hopper Superchip is in full production. Systems from OEM partners are expected to be delivered later this year.

Copyright © 2023 IDG Communications, Inc.