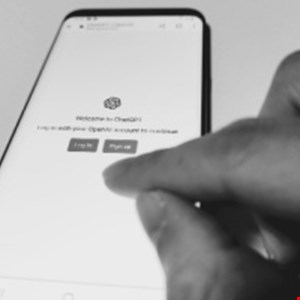

Phishing Sites Use ChatGPT as Lure

Security researchers have warned of several new Windows and Android phishing campaigns using ChatGPT to trick users into unwittingly downloading malware and handing over their credit card details.

Cybersecurity firm Cyble said that several of the phishing sites are being spread by a fake social media page spoofed in the name of ChatGPT developer OpenAI.

“The page seems to be trying to build credibility by including a mix of content, such as videos and other unrelated posts,” it said.

“However, a closer look revealed that some posts on the page contain links that lead users to phishing pages that impersonate ChatGPT. These phishing pages trick users into downloading malicious files onto their machines.”

These links are typosquatted to make the victim think they are being taken to an official ChatGPT site where they can download the much-talked-about tool. In fact, they take the user to a site spoofed to look like the real OpenAI website, which features a “Download for Windows” button.
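As a rough illustration of how the typosquatting described above can be spotted, the sketch below flags domains that sit within a small edit distance of an official one. It is not Cyble's detection method: the function names, the `max_distance` threshold and the lookalike domain in the usage note are hypothetical choices made for this example.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance between two strings, computed row by row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def looks_typosquatted(domain: str, official: str = "openai.com",
                       max_distance: int = 2) -> bool:
    """Return True if `domain` is a near miss of `official`.

    Assumption: a domain within `max_distance` edits of the official one,
    but not equal to it or a subdomain of it, is treated as suspicious.
    """
    domain = domain.lower().strip(".")
    official = official.lower()
    if domain == official or domain.endswith("." + official):
        return False
    return edit_distance(domain, official) <= max_distance
```

Under these assumptions, a made-up lookalike such as `opena1.com` would be flagged, while `openai.com` and its subdomains would not. A check like this only catches simple character swaps; it would miss lures that use an entirely different domain name.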

Clicking this button installs stealer malware on the victim’s machine, Cyble said.

Another phishing site features a “Try ChatGPT” button that actually installs the Lumma stealer, while other variations are being used to spread the Aurora stealer, the Clipper Trojan and other malware.

Yet another phishing campaign uses fake ChatGPT-related payment pages designed to steal victims’ money and credit card information, Cyble warned.

The security vendor also spotted 50 fake Android apps spoofing the ChatGPT brand in order to sneak potentially unwanted programs, adware and spyware onto victims’ devices, as well as commit billing fraud.

“By posing as ChatGPT, these threat actors seek to deceive users into thinking that they are interacting with a legitimate and trustworthy source when in reality, they are being exposed to harmful and malicious content,” Cyble concluded.

“Users who fall victim to these malicious campaigns could suffer financial losses or even compromise their personal information, causing significant harm.”

ChatGPT actually poses a double phishing threat: as well as fraudsters using it as a lure, security experts have previously warned that budding cyber-criminals could use the AI technology to generate convincing phishing campaigns en masse.