Run LLMs Locally with Docker Model Runner | Docker

AI is quickly becoming a core part of modern applications, but running large language models (LLMs) locally can still be a pain. Between picking the right model, navigating hardware quirks, and optimizing for performance, it’s easy to get stuck before you even start building. At the same time, more and more developers want the flexibility to run LLMs locally for development, testing, or even offline use cases. That’s where Docker Model Runner comes in.

Now available in Beta with Docker Desktop 4.40 for macOS on Apple silicon, Model Runner makes it easy to pull, run, and experiment with LLMs on your local machine. No infrastructure headaches, no complicated setup. Here’s what Model Runner offers in our initial beta release.

- Local LLM inference powered by an integrated engine built on top of llama.cpp, exposed through an OpenAI-compatible API.

- GPU acceleration on Apple silicon by executing the inference engine directly as a host process.

- A growing collection of popular, usage-ready models packaged as standard OCI artifacts, making them easy to distribute and reuse across existing Container Registry infrastructure.

Keep reading to learn how to run LLMs locally on your own computer using Docker Model Runner!

Enabling Docker Model Runner for LLMs

Docker Model Runner is enabled by default and shipped as part of Docker Desktop 4.40 for macOS on Apple silicon hardware. However, in case you’ve disabled it, you can easily enable it through the CLI with a single command:

docker desktop enable model-runner

In its default configuration, the Model Runner will only be accessible through the Docker socket on the host, or to containers via the special model-runner.docker.internal endpoint. If you want to interact with it via TCP from a host process (maybe because you want to point some OpenAI SDK within your codebase straight to it), you can also enable it via CLI by specifying the intended port:

docker desktop enable model-runner --tcp 12434

A first look at the command line interface (CLI)

The Docker Model Runner CLI will feel very similar to working with containers, but there are also some caveats regarding the execution model, so let’s check it out. For this guide, I’m going to use a very small model to ensure it runs on hardware with limited resources and provides a fast and responsive user experience. To be more specific, we’ll use the SmolLM2 model, published by Hugging Face in 2024.

We’ll want to start by pulling a model. As with Docker Images, you can omit the specific tag, and it’ll default to latest. But for this example, let’s be specific:

docker model pull ai/smollm2:360M-Q4_K_M

Here I am pulling the SmolLM2 model with 360M parameters and 4-bit quantization. Tags for models distributed by Docker follow this scheme with regard to model metadata:

{model}:{parameters}-{quantization}
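To make the scheme concrete, here is a minimal Java sketch that splits a model reference into its parts. `ModelReference` is a hypothetical helper written for this post, not part of any Docker API, and it assumes the tag follows the `{parameters}-{quantization}` layout described above; tags that don't (such as `latest`) simply yield no parameter or quantization metadata.

```java
import java.util.Optional;

/** Hypothetical helper that splits a reference such as "ai/smollm2:360M-Q4_K_M". */
final class ModelReference {
    final String repository;
    final String tag;
    final Optional<String> parameters;
    final Optional<String> quantization;

    private ModelReference(String repository, String tag,
                           Optional<String> parameters, Optional<String> quantization) {
        this.repository = repository;
        this.tag = tag;
        this.parameters = parameters;
        this.quantization = quantization;
    }

    static ModelReference parse(String reference) {
        int colon = reference.lastIndexOf(':');
        // A ':' that is followed by '/' belongs to a registry host:port, not to a tag.
        if (colon >= 0 && reference.indexOf('/', colon) >= 0) {
            colon = -1;
        }
        // As with images, an omitted tag defaults to "latest".
        String repository = colon < 0 ? reference : reference.substring(0, colon);
        String tag = colon < 0 ? "latest" : reference.substring(colon + 1);

        // Split {parameters}-{quantization} at the first '-'.
        int dash = tag.indexOf('-');
        if (dash < 0) {
            return new ModelReference(repository, tag, Optional.empty(), Optional.empty());
        }
        return new ModelReference(repository, tag,
                Optional.of(tag.substring(0, dash)),
                Optional.of(tag.substring(dash + 1)));
    }

    public static void main(String[] args) {
        ModelReference ref = ModelReference.parse("ai/smollm2:360M-Q4_K_M");
        System.out.println(ref.repository);          // ai/smollm2
        System.out.println(ref.parameters.get());    // 360M
        System.out.println(ref.quantization.get());  // Q4_K_M
    }
}
```

Splitting at the first hyphen keeps quantization names that contain underscores, like `Q4_K_M`, in one piece.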

After having the model pulled, let’s give it a spin by asking it a question:

docker model run ai/smollm2:360M-Q4_K_M "Give me a fact about whales."

Whales are magnificent marine animals that have fascinated humans for centuries. They belong to the order Cetacea and have a unique body structure that allows them to swim and move around the ocean. Some species of whales, like the blue whale, can grow up to 100 feet (30 meters) long and weigh over 150 tons (140 metric tons) each. They are known for their powerful tails that propel them through the water, allowing them to dive deep to find food or escape predators.

Is this whale fact actually true? Honestly, I have no clue; I’m not a marine biologist. But it’s a fun example to illustrate a broader point: LLMs can sometimes generate inaccurate or unpredictable information. As with any LLM output, and especially with smaller local models that have few parameters or low-bit quantization, it’s important to verify what you’re getting back.

So what actually happened when we ran the docker model run command? It makes sense to have a closer look at the technical underpinnings, since it differs from what you might expect after using docker container run commands for years. In the case of the Model Runner, this command won’t spin up any kind of container. Instead, it calls an Inference Server API endpoint, which is hosted by the Model Runner through Docker Desktop and provides an OpenAI-compatible API. The Inference Server uses llama.cpp as the Inference Engine, running as a native host process. It loads the requested model on demand and then performs the inference on the received request. The model then stays in memory until another model is requested, or until a pre-defined inactivity timeout (currently 5 minutes) is reached.

That also means that there isn’t a need to perform a docker model run before interacting with a specific model from a host process or from within a container. Model Runner will transparently load the requested model on-demand, assuming it has been pulled beforehand and is locally available.
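If it helps to picture this lifecycle, the following Java sketch models it as a single slot that loads on demand and unloads after an idle period. This is purely a conceptual illustration under my own assumptions: `SingleModelSlot` and its methods are hypothetical, and nothing here reflects how Model Runner is actually implemented. The clock is passed in explicitly so the behavior can be followed without waiting five minutes.

```java
import java.time.Duration;
import java.time.Instant;

/** Conceptual, hypothetical model of load-on-demand with an inactivity timeout. */
final class SingleModelSlot {
    private final Duration idleTimeout;
    private String loadedModel;   // null when nothing is in memory
    private Instant lastUsed;

    SingleModelSlot(Duration idleTimeout) {
        this.idleTimeout = idleTimeout;
    }

    /** Handles a request at time `now`; returns true if the model had to be loaded. */
    boolean request(String model, Instant now) {
        evictIfIdle(now);
        boolean needsLoad = !model.equals(loadedModel);
        if (needsLoad) {
            loadedModel = model;  // replaces whichever model was loaded before
        }
        lastUsed = now;
        return needsLoad;
    }

    /** Drops the model once it has been idle for at least the timeout. */
    void evictIfIdle(Instant now) {
        if (loadedModel != null
                && Duration.between(lastUsed, now).compareTo(idleTimeout) >= 0) {
            loadedModel = null;
        }
    }

    String loadedModel() {
        return loadedModel;
    }

    public static void main(String[] args) {
        SingleModelSlot slot = new SingleModelSlot(Duration.ofMinutes(5));
        Instant t0 = Instant.parse("2025-01-01T00:00:00Z");
        String model = "ai/smollm2:360M-Q4_K_M";
        System.out.println(slot.request(model, t0));                   // true: first load
        System.out.println(slot.request(model, t0.plusSeconds(60)));   // false: still in memory
        System.out.println(slot.request(model, t0.plusSeconds(600)));  // true: idle timeout passed
    }
}
```

The practical takeaway is that the first request after a pull, a model switch, or a long pause pays the loading cost, while requests in quick succession do not.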

Speaking of interacting with models from other processes, let’s have a look at how to integrate with Model Runner from within your application code.

Having fun with GenAI development

Model Runner exposes an OpenAI-compatible endpoint under http://model-runner.docker.internal/engines/v1 for containers, and under http://localhost:12434/engines/v1 for host processes (assuming you have enabled TCP host access on the default port 12434). You can use this endpoint to hook up any OpenAI-compatible clients or frameworks.
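If you’d rather not pull in a framework, a plain HTTP call works too. The sketch below uses the JDK’s built-in `java.net.http` client and assumes the endpoint accepts the standard OpenAI `chat/completions` path and request shape relative to the base URL; the `ModelRunnerChat` class and its helper methods are my own illustration. It also assumes TCP host access is enabled and the model has already been pulled.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class ModelRunnerChat {

    // Host-side base URL; requires TCP access enabled on port 12434.
    static final String BASE_URL = "http://localhost:12434/engines/v1";

    /** Minimal escaping for a JSON string; prefer a JSON library in real code. */
    static String jsonEscape(String s) {
        return s.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\n", "\\n");
    }

    /** Builds the body of an OpenAI-style chat completion request. */
    static String chatBody(String model, String prompt) {
        return "{\"model\":\"" + jsonEscape(model) + "\","
                + "\"messages\":[{\"role\":\"user\",\"content\":\""
                + jsonEscape(prompt) + "\"}]}";
    }

    /** Builds the POST request without sending it. */
    static HttpRequest chatRequest(String baseUrl, String model, String prompt) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/chat/completions"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(chatBody(model, prompt)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = chatRequest(
                BASE_URL, "ai/smollm2:360M-Q4_K_M", "Give me a fact about whales.");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // Prints the raw JSON response returned by the endpoint.
        System.out.println(response.body());
    }
}
```

From inside a container, the same request should work with `http://model-runner.docker.internal/engines/v1` passed as the base URL instead.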

In this example, I’m using Java and LangChain4j. Since I develop and run my Java application directly on the host, all I have to do is configure the Model Runner OpenAI endpoint as the baseUrl and specify the model to use, following the Docker Model addressing scheme we’ve already seen in the CLI usage examples.

import dev.langchain4j.model.openai.OpenAiChatModel;

// Point the OpenAI-compatible client at Model Runner's host endpoint.
OpenAiChatModel model = OpenAiChatModel.builder()
        .baseUrl("http://localhost:12434/engines/v1")
        .modelName("ai/smollm2:360M-Q4_K_M")
        .build();

String answer = model.chat("Give me a fact about whales.");
System.out.println(answer);

And that’s all there is to it. Pretty straightforward, right?

Please note that the model must already be present locally for this code to work.

Finding more models

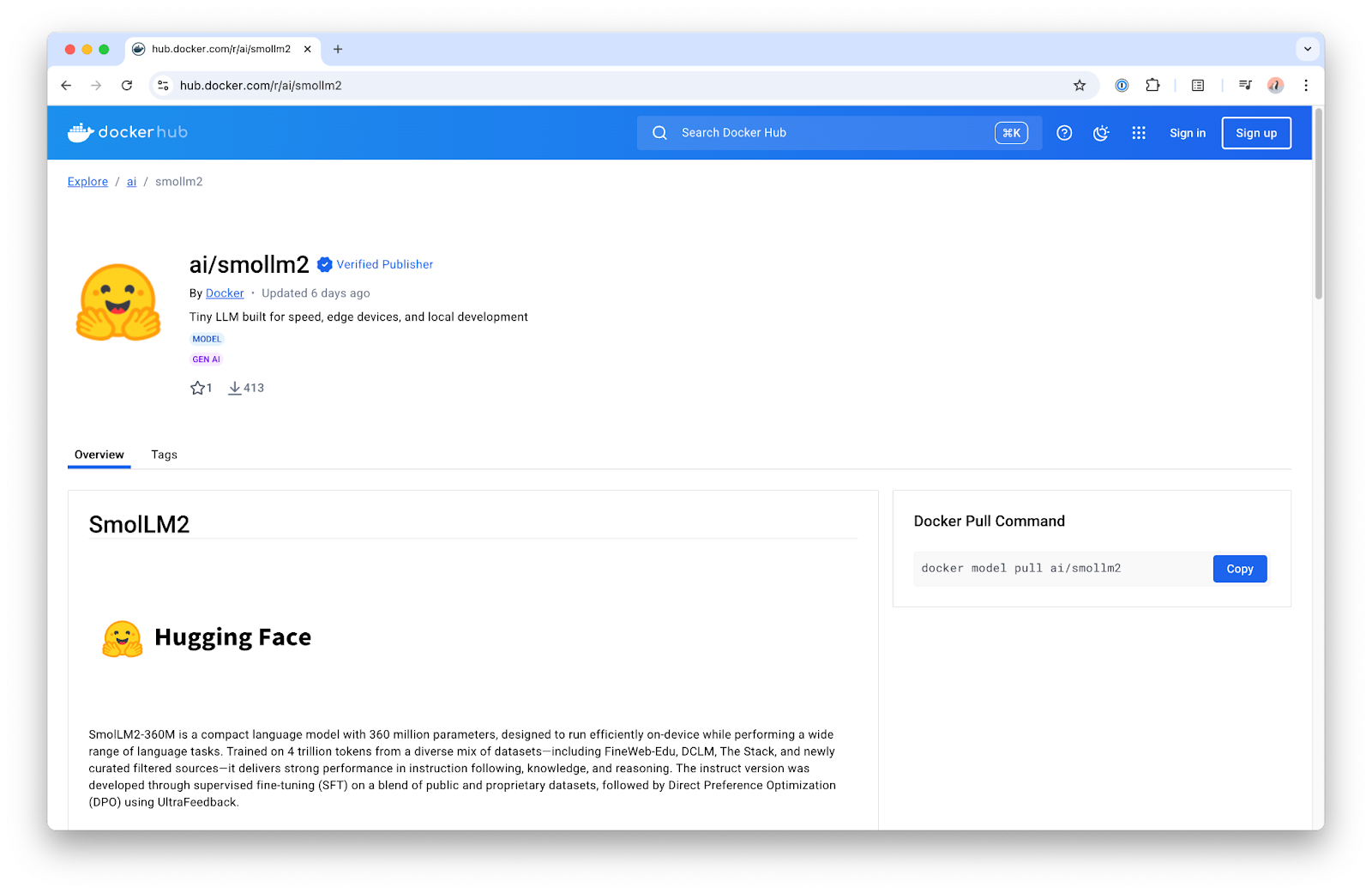

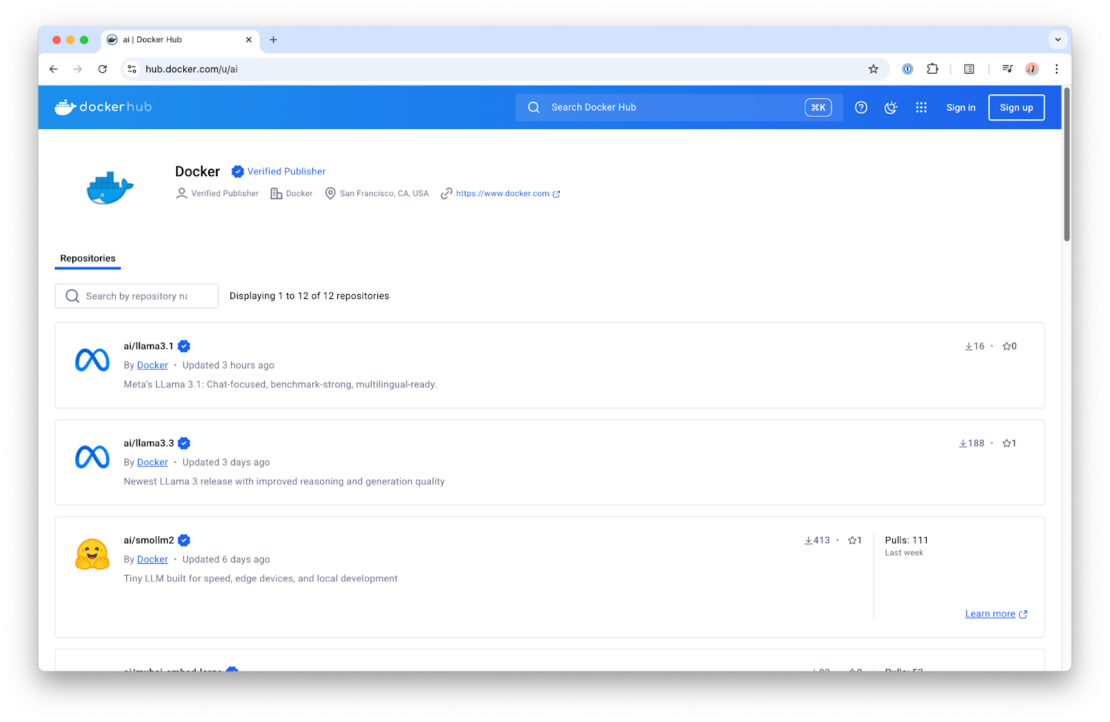

Now, you probably don’t just want to use SmolLM models, so you might wonder what other models are currently available to use with Model Runner. The easiest way to get started is by checking out the ai/ namespace on Docker Hub.

On Docker Hub, you can find a curated list of the most popular models that are well-suited for local use cases. They’re offered in different flavors to suit different hardware and performance needs. You can also find more details about each in the model card, available on the overview page of the model repository.

What’s next?

Of course, this was just a first look at what Docker Model Runner can do. We have many more features in the works and can’t wait to see what you build with it. For hands-on guidance, check out our latest YouTube tutorial on running LLMs locally with Model Runner. And be sure to keep an eye on the blog: we’ll be sharing more updates, tips, and deep dives as we continue to expand Model Runner’s capabilities.