The cost of (AI) business: Getting the most out of your data center now – and in the future

Today’s CIOs face unprecedented challenges in modernizing their data centers to accommodate the rapidly rising demands on their infrastructures—while also facing increasingly constrained budgets. Generative AI (genAI) burst onto the scene in November 2022 with the release of ChatGPT, surprising almost everyone in the industry with its capabilities, and suddenly every CEO and board of directors began telling their CIOs to get genAI capabilities online as fast as possible.

It’s common knowledge that genAI workloads are demanding, but the scale of additional power, cooling, and processing that CIOs will need to provide to support them is hard to comprehend, especially considering the increasingly short turnaround times companies have come to expect from IT initiatives.

According to Sajjad Moazeni,¹ a professor of electrical and computer engineering at the University of Washington, given that ChatGPT receives hundreds of millions of queries daily, it’s likely consuming 1 GWh every day, enough to power 33,000 US homes over that same time period.
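As a quick sanity check on those figures, the sketch below divides the quoted 1 GWh per day by the quoted 33,000 homes to see what per-home consumption the comparison implies. The function name is illustrative, and the remark about typical household use in the closing note is a rough assumption, not a figure from the article.

```python
# Sanity check of the "1 GWh per day = 33,000 US homes" comparison.
# Inputs are the two figures quoted in the article; everything else is arithmetic.

KWH_PER_GWH = 1_000_000  # 1 GWh = 1,000,000 kWh


def implied_kwh_per_home_per_day(total_gwh_per_day: float, homes: int) -> float:
    """Return the daily energy use per home implied by the comparison."""
    return total_gwh_per_day * KWH_PER_GWH / homes


per_home_daily = implied_kwh_per_home_per_day(1.0, 33_000)
per_home_annual = per_home_daily * 365

print(f"Implied use per home: {per_home_daily:.1f} kWh/day")    # about 30.3 kWh/day
print(f"Implied use per home: {per_home_annual:,.0f} kWh/year")  # about 11,061 kWh/year
```

The implied figure of roughly 30 kWh per home per day is in the same range as commonly cited averages for US household electricity use, so the comparison is at least internally plausible.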

Even more worrisome, the decades-long scale-up for enterprise computing has already stretched global electrical supplies to the limit, and the new demands of AI data centers threaten to surpass current capacity. In 2022, data centers accounted for 4% of US electricity consumption, and in 2026, they’re projected² to account for 6%, according to an International Energy Agency (IEA) report.

GenAI isn’t the only factor putting pressure on CIOs to modernize. Business models, licensing costs, and modern, highly demanding workloads are also concerns. If a CIO is working with older hardware that requires more cores to deliver the desired level of performance, and software is licensed per core, IT could be looking at big increases in costs without changing anything significant in the stack.

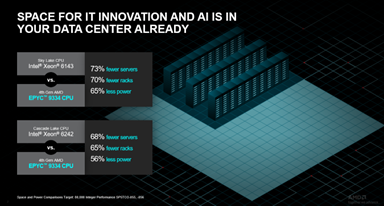

Power and cooling costs continue to rise, and aging data center infrastructure is becoming less reliable and more expensive to maintain. At the same time, demands from the business to support new workloads are growing daily. However, data centers have very little unused space or power drops for deploying additional gear to handle the increase. IT leaders will need to find ways to meet intense resource requirements without expanding their real estate footprint or consuming additional power.

Data centers must become far more efficient, and AMD EPYC™ CPUs can play a significant role in achieving these goals.

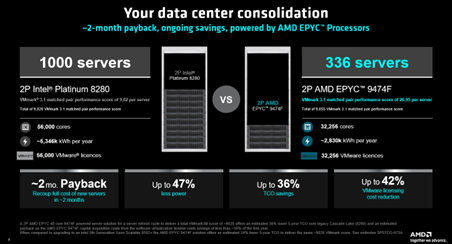

4th Gen AMD EPYC CPUs enable data centers to use fewer servers and less power to do the same amount of work when compared to the competition. For instance, servers based on the AMD EPYC 9474F CPU can use up to 47% less power and cut software licensing costs by up to 42% when compared to legacy Intel® Xeon® Platinum 8280 CPU-based servers.³ Additionally, with the savings realized from upgrading to AMD EPYC CPUs, a customer can fully recoup the cost of the hardware investment in approximately two months. Older Intel servers of this type are well suited for replacement, given their relative inefficiency, and are often beyond their depreciation cycle.
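The "fewer servers" claim can be illustrated with the VMmark 3.1 scores listed in footnote 3. The sketch below is a rough approximation, not the method used by AMD's estimator tool: it assumes performance scales linearly across servers and that the server count is simply the ~9020-unit target divided by the per-server score, rounded up. The function and dictionary names are hypothetical.

```python
import math
from decimal import Decimal

# Performance target and per-server VMmark 3.1 scores, as quoted in footnote 3.
# Decimal is used so that 9020 / 9.02 comes out as exactly 1000.
TARGET_PERFORMANCE = Decimal("9020")

VMMARK_SCORES = {
    "2P AMD EPYC 9474F": Decimal("26.95"),
    "2P Intel Xeon Platinum 8592+": Decimal("27.52"),
    "2P Intel Xeon Platinum 8280 (legacy)": Decimal("9.02"),
}


def servers_needed(target: Decimal, score_per_server: Decimal) -> int:
    """Whole servers required to reach the target, assuming linear scaling."""
    return math.ceil(target / score_per_server)


server_counts = {
    name: servers_needed(TARGET_PERFORMANCE, score)
    for name, score in VMMARK_SCORES.items()
}

for name, count in server_counts.items():
    print(f"{name}: {count} servers")
# 2P AMD EPYC 9474F: 335 servers
# 2P Intel Xeon Platinum 8592+: 328 servers
# 2P Intel Xeon Platinum 8280 (legacy): 1000 servers
```

Under these assumptions, about 335 EPYC 9474F servers would cover the work of 1,000 legacy Platinum 8280 servers, roughly a three-to-one consolidation. The power, licensing, and payback figures quoted above depend on additional inputs to the estimator tool that the article does not list.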

The change from older servers to AMD EPYC CPU-based systems can be surprisingly simple. AMD has deep technical relationships across the server ecosystem, from OEMs and OS vendors to hypervisor and application providers. You can still buy from known and trusted suppliers. The only things CIOs might have to give up are a hefty chunk of their current power bill and all of their worries about supporting the coming workload onslaught.

Learn how AMD can help your organization get ready to say “yes” to AI: https://www.amd.com/en/products/processors/server/epyc/ai.html

1 Q&A: UW researcher discusses just how much energy ChatGPT uses | UW News (washington.edu)

2 International Energy Agency (IEA) report

3 SP5TCO-073: As of 06/18/2024, this scenario contains many assumptions and estimates and, while based on AMD internal research and best approximations, should be considered an example for information purposes only, and not used as a basis for decision making over actual testing. The Server Refresh & Greenhouse Gas Emissions TCO (total cost of ownership) Estimator Tool compares the selected AMD EPYC™ and Intel® Xeon® CPU based server solutions required to deliver a TOTAL_PERFORMANCE of ~9020 units of VMmark3 matched pair performance based on the published scores (or estimated if indicated by an asterisk) for Intel Xeon and AMD EPYC CPU based servers. This estimation reflects a 5-year time frame. This analysis compares a 2P AMD 48 core EPYC_9474F powered server with a VMmark 3.1 score of 26.95 @ 26 tiles, compared to a 2P Intel Xeon 64 core Platinum_8592+ based server with a VMmark 3.1 score of 27.52 @ 28 tiles, versus legacy 2P Intel Xeon 28 core Platinum_8280 based server with a VMmark 3.1 score of 9.02 @ 9 tiles. Environmental impact estimates are made leveraging this data, using the Country / Region specific electricity factors from the ‘2020 Grid Electricity Emissions Factors v1.4 – September 2020’, and the United States Environmental Protection Agency ‘Greenhouse Gas Equivalencies Calculator’. Results generated by: AMD EPYC™ Server Refresh & Greenhouse Gas Emission TCO Estimation Tool – version 1.51 PRO