The three-way race for GPU dominance in the data center

The modern graphics processing unit (GPU) started out as an accelerator for Windows video games, but over the last 20 years it has morphed into an enterprise server processor for high-performance computing and artificial-intelligence applications.

Now GPUs are at the tip of the performance spear used in supercomputing, AI training and inference, drug research, financial modeling, and medical imaging. They have also been applied to more mainstream tasks where CPUs just aren’t fast enough, such as GPU-powered relational databases.

As demand for GPUs grows, so will competition among the vendors that make GPUs for servers, of which there are just three: Nvidia, AMD, and (soon) Intel. Intel has tried and failed twice to come up with an alternative to its rivals’ GPUs but is taking another run at it.

The importance of GPUs in data centers

These three vendors recognize the demand for GPUs in data centers as a growing opportunity. That’s because GPUs are better suited than CPUs for handling many of the calculations required by AI and machine learning in enterprise data centers and hyperscaler networks. CPUs can handle the work; it just takes them longer.

Because GPUs are designed to solve complex mathematical problems in parallel, breaking them into separate tasks that they work on at the same time, they solve them more quickly. To accomplish this, they have many more cores than a general-purpose CPU. For example, Intel’s Xeon server CPUs have up to 28 cores, while AMD’s Epyc server CPUs have up to 64. By contrast, the A100, built on Nvidia’s current GPU architecture, Ampere, has 6,912 cores, all operating in parallel to do one thing: math processing, specifically floating-point math.
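To make "breaking a problem into separate tasks" concrete, here is a minimal Python sketch of a data-parallel operation, SAXPY (a * x + y computed element by element), a common GPU benchmark kernel. Every element is an independent task, so a pool of workers can process them concurrently. A CPU thread pool stands in for the GPU's cores here purely as an illustrative assumption; in CPython the global interpreter lock means this shows the decomposition, not a speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_element(a: float, x: float, y: float) -> float:
    """One independent unit of work: a * x + y for a single element."""
    return a * x + y


def saxpy_parallel(a: float, xs: list[float], ys: list[float], workers: int = 8) -> list[float]:
    """Compute a * x + y for every element pair, spreading the pairs across workers.

    No element depends on any other, so the tasks can run in any order or all
    at once. A GPU exploits the same property, but with thousands of cores.
    """
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in input order, even though the
        # individual calls may run concurrently on different threads.
        return list(pool.map(saxpy_element, [a] * len(xs), xs, ys))


if __name__ == "__main__":
    print(saxpy_parallel(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))  # [12.0, 24.0, 36.0]
```

Because no task waits on another, adding more workers (or, on a GPU, more cores) lets more elements be processed at the same moment.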

GPU performance is measured by how many of these floating-point operations a chip can perform per second, or FLOPS. The figure often specifies the floating-point precision used for the measurement, such as FP64 (64-bit double precision).
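A vendor's peak FLOPS number is usually a simple product: execution units × clock rate × operations each unit completes per cycle. The sketch below reproduces the A100's FP64 figure under stated assumptions taken from Nvidia's public spec sheet rather than this article: 108 streaming multiprocessors with 32 FP64 units each (the 6,912 cores quoted above are FP32 cores, 64 per multiprocessor), a 1.41GHz boost clock, and 2 operations per cycle because a fused multiply-add counts as two.

```python
def peak_flops(units: int, clock_hz: float, flops_per_unit_per_cycle: int) -> float:
    """Theoretical peak: every execution unit retires its maximum work every cycle."""
    return units * clock_hz * flops_per_unit_per_cycle


# Assumed A100 figures (from Nvidia's spec sheet, not from this article).
A100_FP64_UNITS = 108 * 32      # streaming multiprocessors x FP64 units per SM
A100_BOOST_CLOCK_HZ = 1.41e9    # boost clock in hertz
FMA_FLOPS = 2                   # a fused multiply-add counts as two operations

if __name__ == "__main__":
    tflops = peak_flops(A100_FP64_UNITS, A100_BOOST_CLOCK_HZ, FMA_FLOPS) / 1e12
    print(f"A100 peak FP64: {tflops:.1f} TFLOPS")  # A100 peak FP64: 9.7 TFLOPS
```

Real workloads rarely sustain this number, which is why it is best read as a ceiling rather than a measured speed.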

So what does the year hold for server GPUs? Quite a bit, as it turns out. Nvidia, AMD, and Intel have laid their cards on the table about their immediate plans, and it looks like the competition will be stiff. Here’s a look at what each has in store.

Nvidia

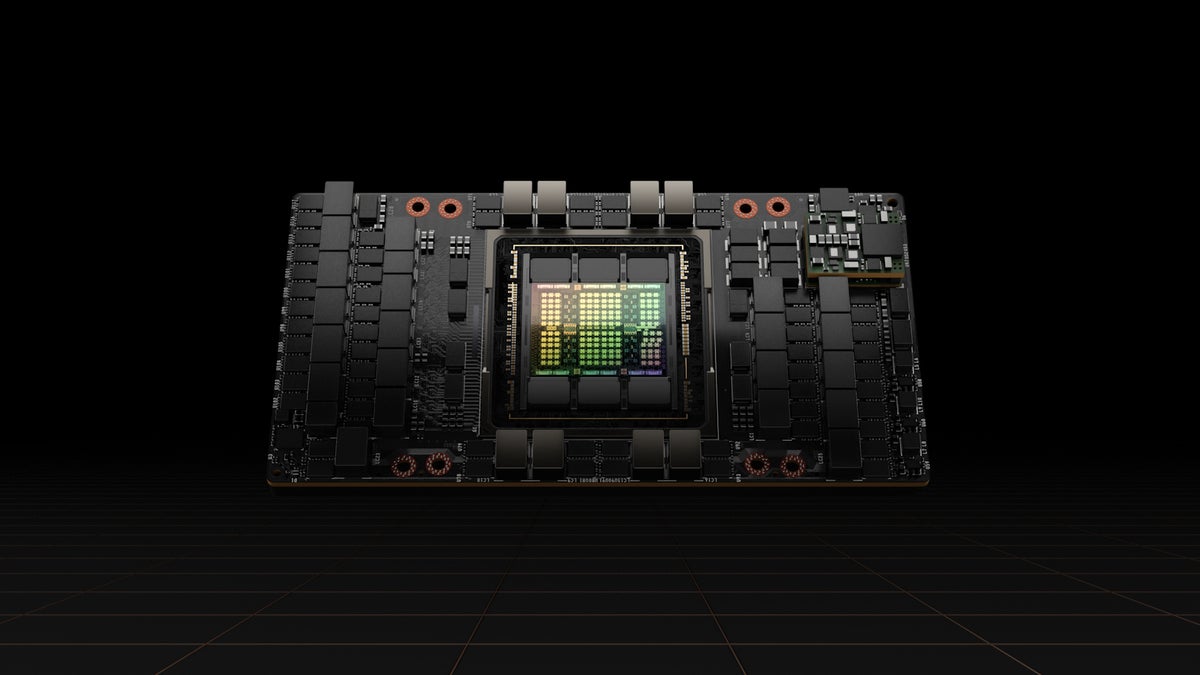

Nvidia laid out its GPU roadmap for the year in March with the announcement of its Hopper GPU architecture, claiming that, depending on use, it can deliver three to six times the performance of its previous architecture, Ampere, whose A100 GPU weighs in at 9.7 TFLOPS of FP64. Nvidia says the Hopper H100 will top out at 60 TFLOPS of FP64 performance using its tensor cores.

Like previous GPUs, the Hopper H100 can operate as a standalone processor on an add-in PCI Express board in a server. But Nvidia will also pair it with Grace, a custom Arm-based CPU that it developed and expects to have available in 2023.

For Hopper, Nvidia did more than just amp up the GPU. For the Grace CPU that pairs with it, Nvidia turned to LPDDR5X, a faster version of the low-power double data rate 5 (LPDDR5) memory normally used in smartphones, and uses it with error-correcting code (ECC). Nvidia says this delivers twice the memory bandwidth of traditional DDR5 memory, for 1TBps of throughput.

Along with Hopper, Nvidia announced NVLink 4, its latest GPU-to-GPU interconnect. It allows Hopper GPUs to talk to each other directly with a maximum total bandwidth of 900GBps, seven times faster than if they connected through a PCIe Gen5 bus.
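The "seven times" figure can be roughly checked with arithmetic. The sketch below assumes the comparison is against a PCIe Gen5 x16 link (32 GT/s per lane, 128b/130b encoding) with both directions counted, since 900GBps is a total; those assumptions are ours, not stated in the article.

```python
def pcie_link_gbs(gt_per_s: float, lanes: int, payload_bits: int = 128, line_bits: int = 130) -> float:
    """One-direction PCIe bandwidth in GB/s, allowing for 128b/130b line encoding."""
    return gt_per_s * lanes * (payload_bits / line_bits) / 8


# Assumptions: a Gen5 x16 slot, counting traffic in both directions,
# because the 900GBps NVLink 4 figure is a total across directions.
GEN5_X16_TOTAL = 2 * pcie_link_gbs(32.0, 16)
NVLINK4_TOTAL = 900.0

if __name__ == "__main__":
    print(f"PCIe Gen5 x16, both directions: {GEN5_X16_TOTAL:.0f} GB/s")  # 126 GB/s
    print(f"NVLink 4 advantage: {NVLINK4_TOTAL / GEN5_X16_TOTAL:.1f}x")  # 7.1x
```

Under these assumptions the ratio lands at about 7x, consistent with Nvidia's claim.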

“If you think about data-center products, you have three components, and they have to all move forward at the same pace. That’s memory, the processor, and communications,” said Jon Peddie, president of Jon Peddie Research. “And Nvidia has done that with Hopper. Those three technologies don’t move in synchronization, but Nvidia has managed to do it.”

Nvidia plans to ship the Hopper GPU starting in the third quarter of 2022. OEM partners include Atos, BOXX Technologies, Cisco, Dell Technologies, Fujitsu, GIGABYTE, H3C, Hewlett Packard Enterprise, Inspur, Lenovo, Nettrix, and Supermicro.

Due to ongoing supply pressures at its chipmaker TSMC, Nvidia opened the door to possibly working with Intel’s foundry business, but cautioned that such a deal would be years away.

AMD

AMD has the wind at its back. Sales are increasing quarter to quarter, its x86 CPU market share is growing, and in February it completed its acquisition of Xilinx, along with that company’s field-programmable gate arrays (FPGAs), adaptive systems on a chip (SoCs), AI engines, and software expertise. AMD is expected to launch its Zen 4 CPU by the end of 2022.

AMD’s new gaming GPUs based on its RDNA 3 architecture are also due out this year. AMD has been tight-lipped about RDNA 3 specs, but gaming-enthusiast bloggers have circulated unconfirmed rumors of a 50% to 60% performance gain over RDNA 2.

In the meantime, AMD has begun shipping the Instinct MI250 line of GPU accelerators for enterprise computing, which is considerably faster than the previous MI100 series. The memory bus has doubled from 4,096 bits to 8,192 bits, memory bandwidth has more than doubled from 1.23TBps to 3.2TBps, and FP64 performance has more than quadrupled, from 11.5 TFLOPS to 47.9 TFLOPS. That’s slower than the 60 TFLOPS claimed for Nvidia’s Hopper, but it’s still competitive.
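Peak memory bandwidth follows directly from bus width and the data rate of each pin, which is why doubling the bus width and speeding up the memory more than doubles the total. The bus widths below come from the article; the per-pin rates (2.4 Gbps HBM2 on the MI100, 3.2 Gbps HBM2e on the MI250 series) are assumptions based on AMD's published specs.

```python
def peak_bandwidth_gbs(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: pins x per-pin rate (Gb/s), / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


# Bus widths from the article; per-pin data rates are assumed from AMD spec sheets.
MI100_GBS = peak_bandwidth_gbs(4096, 2.4)
MI250_GBS = peak_bandwidth_gbs(8192, 3.2)

if __name__ == "__main__":
    print(f"MI100: {MI100_GBS:.1f} GB/s")  # MI100: 1228.8 GB/s (the article's 1.23TBps)
    print(f"MI250: {MI250_GBS:.1f} GB/s")  # MI250: 3276.8 GB/s (quoted as 3.2TBps)
    print(f"Bandwidth ratio: {MI250_GBS / MI100_GBS:.1f}x")
    print(f"FP64 ratio: {47.9 / 11.5:.1f}x")  # uses the article's FP64 figures
```

The bandwidth ratio comes out around 2.7x and the FP64 ratio around 4.2x, matching "more than doubled" and "more than quadrupled."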

Daniel Newman, principal analyst with Futurum Research, said AMD’s opportunity to grab market share will come as the AI market grows. He also said he believes that AMD’s success in the CPU market could help its GPU sales. “What AMD has really created over the past five, seven years is a pretty strong loyalty that can possibly carry over,” he said. “The question is, can they grow AI/HPC market share significantly?”

He said the answer could be, “Yes,” because the company has been extremely good at finding market opportunities and managing its supply chain in order to deliver on its goals. And with CEO Lisa Su at the helm, “I find it very difficult to rule out AMD in any area in which they decided to compete at this point,” he said.

Jonathan Cassell, principal analyst for advanced computing, AI, and IoT at Omdia, said he feels AMD’s success with its Epyc server CPUs will provide an opening for the Instinct processor.

“I think that over time, we can see AMD leverage its success over on the data-center microprocessor side and use that as an in to get companies to take a look at [Instinct]. I think we’ll be seeing AMD trying to leverage its relationships with customers to try to expand its presence out there,” he said.

Instinct has been shipping since Q1 2022. So far, its highest-profile use case has been a supercomputer at Oak Ridge National Laboratory that packs a lot of performance into a very small space. The lab is also building an all-AMD exascale supercomputer called Frontier, due for deployment later this year. OEM partners shipping products with Instinct include ASUS, Atos, Dell Technologies, Gigabyte, Hewlett Packard Enterprise (HPE), Lenovo, Penguin Computing, and Supermicro.

Intel

Intel has long struggled to make anything but basic integrated GPUs for its desktop CPUs. For desktops it now has its Intel Xe line, while the server equivalent is known as the Intel Server GPU.

Now the company says it will enter the data-center GPU field this year with a processor code-named Ponte Vecchio that reportedly delivers 45 TFLOPS at FP64, almost the same as AMD’s MI250 and 25% behind Nvidia’s Hopper.
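The percentages in that comparison are simple ratios of the vendors' claimed FP64 peaks. The sketch below uses only the figures quoted in this article; keep in mind that vendor peak numbers may be measured under different conditions, so the gaps are indicative rather than benchmarked.

```python
# FP64 peak figures as quoted in this article (vendor claims, in TFLOPS).
FP64_TFLOPS = {
    "Nvidia H100 (Hopper)": 60.0,
    "AMD Instinct MI250": 47.9,
    "Intel Ponte Vecchio": 45.0,
}


def gap_percent(candidate: float, reference: float) -> float:
    """How far `candidate` trails `reference`, as a percentage of `reference`."""
    return (1 - candidate / reference) * 100


if __name__ == "__main__":
    pvc = FP64_TFLOPS["Intel Ponte Vecchio"]
    for name in ("Nvidia H100 (Hopper)", "AMD Instinct MI250"):
        print(f"Ponte Vecchio vs {name}: {gap_percent(pvc, FP64_TFLOPS[name]):.0f}% behind")
```

This prints a 25% gap to Hopper and roughly a 6% gap to the MI250, which is what "almost the same" refers to.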

“It’s really going to disrupt the environment,” said Peddie. “From what they have told us—and we’ve heard from rumors and other leaks—it’s very scalable.” Ponte Vecchio is due out later this year.

Newman has also heard positive things about Ponte Vecchio, but said the real opportunity for Intel is with its oneAPI software strategy.

oneAPI is a unified software-development platform, still in the works, that is designed to pick the most appropriate type of silicon Intel makes (x86, GPU, FPGA, AI processors) when compiling applications, rather than forcing developers to pick one type of silicon and code to it. It also provides a number of API libraries for functions like video processing, communications, analytics, and neural networks.

This abstraction eliminates the need to determine the best processor to target, as well as the need to work with different tools, libraries, and programming languages. So rather than coding to a specific processor in a specific language, developers can focus on the business logic and write in Data Parallel C++ (DPC++), an open-source variant of C++ designed specifically for data parallelism and heterogeneous programming.
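The core idea, writing the computation once and letting a separate step decide which silicon runs it, can be sketched in a few lines. This is a hypothetical Python illustration, not oneAPI's or DPC++'s actual API: `Device`, `select_device`, `run_kernel`, and the preference order are all invented for the example, loosely echoing the device-selector concept in the SYCL standard that DPC++ builds on. For simplicity the choice here is made at run time rather than when compiling.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Device:
    """Hypothetical description of one piece of available silicon."""
    name: str
    kind: str  # hypothetical categories: "gpu", "fpga", or "cpu"
    available: bool


# Hypothetical preference order; a real toolchain would weigh far more factors.
PREFERENCE = ("gpu", "fpga", "cpu")


def select_device(devices: Sequence[Device]) -> Device:
    """Return the most preferred available device."""
    for kind in PREFERENCE:
        for device in devices:
            if device.kind == kind and device.available:
                return device
    raise RuntimeError("no usable device found")


def run_kernel(
    kernel: Callable[[float], float],
    data: Sequence[float],
    devices: Sequence[Device],
) -> tuple[str, list[float]]:
    """Apply the same kernel to every element on whichever device is selected.

    The kernel is written once and never mentions hardware; only
    select_device() does. In this sketch the "device" is just a label and
    the work actually runs in plain Python.
    """
    device = select_device(devices)
    return device.name, [kernel(x) for x in data]


if __name__ == "__main__":
    machine = [Device("Xeon CPU", "cpu", True), Device("Ponte Vecchio GPU", "gpu", True)]
    print(run_kernel(lambda x: x * x, [1.0, 2.0, 3.0], machine))
    # ('Ponte Vecchio GPU', [1.0, 4.0, 9.0])
```

If the GPU were marked unavailable, the same kernel would run unchanged on the CPU; that separation of business logic from hardware choice is what the platform promises developers.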

One factor that separates Intel from Nvidia and AMD is where it makes its chips. While the others use Taiwanese chipmaker TSMC, Intel manufactures many of its own chips in the US, with other factories in Ireland, Malaysia, and Israel. And it has big plans to build more in the US. That gives it certain advantages, Cassell said. “The control [it has] of its own manufacturing gives it a control of its destiny, in a certain way,” he said. “I see these things as assets for the company.”

In the end, said Newman, the competition among Nvidia, AMD, and Intel could come down to a software race. “If you asked [Nvidia’s] top engineers, they’ll say we’re not a chip company. We’re a software company. I really do believe that Intel has not really thought like a software company about AI up to now, but if they can get [oneAPI] right, I see some real opportunity there,” he said.

Copyright © 2022 IDG Communications, Inc.