Using AI Tools to Convert a PDF into Images | Docker

This ongoing Docker Labs GenAI series explores the exciting space of AI developer tools. At Docker, we believe there is a vast scope to explore, openly and without the hype. We will share our explorations and collaborate with the developer community in real time. Although developers have adopted autocomplete tooling like GitHub Copilot and use chat, there is significant potential for AI tools to assist with more specific tasks and interfaces throughout the entire software lifecycle. Therefore, our exploration will be broad. We will be releasing software as open source so you can play, explore, and hack with us, too.

How do you turn a PDF into a set of images? A modern suggestion could be to get Claude or GPT to write you a CLI command for something like ImageMagick. Let’s follow that train of thought.

If we enter a prompt like “How do I turn a PDF into a bunch of images using ImageMagick,” we will indeed get something that looks like it might be a solution.

Imagemagick includes a command-line tool called convert which you can use to convert PDF files into images. Here's how you can do it:

1. Open your terminal

2. Change directory to where your PDF is located:

```
cd /path/to/your/pdf
```

3. Use the following command to convert the PDF into images (in this case JPEG):

```
convert -density 300 -quality 100 your_file.pdf output_name.jpg
```

The agent will then explain how convert works and finish with a suggestion to “please make sure that you have installed ImageMagick before running these commands.”

Instead of worrying about whether ImageMagick is installed, however, let’s use Docker as our tool provider and prompt it to go ahead and run the command. If you’re following along in this series, you’ll have seen that we are using Markdown files to mix together tools and prompts. Here’s the first prompt we tried:

---

tools:

- name: imagemagick

---

# prompt user

Use Imagemagick to convert the family.pdf file into a bunch of jpg images.

After executing this prompt, the LLM generated a tool call, which we executed in the Docker runtime, and it successfully converted family.pdf into nine .jpg files (my family.pdf file had nine pages).

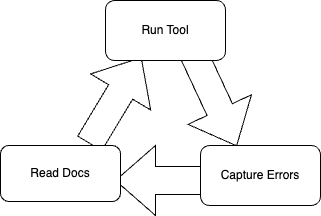

Figure 1 shows the flow from our VS Code extension.

We have given enough context to the LLM that it is able to plan a call to this ImageMagick binary. And, because this tool is available on Docker Hub, we don’t have to “make sure that ImageMagick is installed.” This would be the equivalent command if you were to use docker run directly:

# family.pdf must be located in your $PWD

docker run --rm -v "$PWD":/project --workdir /project vonwig/imagemagick:latest convert -density 300 -quality 300 family.pdf family.jpg
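If you want to script the same invocation, it can help to assemble the command as an argument list rather than a shell string. The following is a minimal sketch, not part of the Docker Labs tooling: the helper name `docker_run_argv` and its parameters are hypothetical, and it only mirrors the flags in the command above.

```python
import os
import shlex


def docker_run_argv(image, tool_args, host_dir=None):
    """Build a `docker run` argv that mounts host_dir at /project.

    Hypothetical helper for illustration; it mirrors the flags used in the
    article's command and does not start a container itself.
    """
    host_dir = host_dir or os.getcwd()
    return [
        "docker", "run", "--rm",
        "-v", f"{host_dir}:/project",  # share the directory that holds family.pdf
        "--workdir", "/project",       # run the tool inside the mounted directory
        image,
        *tool_args,
    ]


argv = docker_run_argv(
    "vonwig/imagemagick:latest",
    ["convert", "-density", "300", "-quality", "300", "family.pdf", "family.jpg"],
)
# Print the command for inspection instead of executing it.
print(shlex.join(argv))
```

Passing `argv` to `subprocess.run(argv, check=True)` would execute it, assuming Docker is installed and family.pdf is in the mounted directory. Building a list avoids shell-quoting problems when a path contains spaces.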

The tool ecosystem

How did this work? The process relied on two things:

- Tool distribution and discovery (pulling tools into Docker Hub for distribution to our Docker Desktop runtime).

- Automatic generation of Agent Tool interfaces.

When we first started this project, we expected that we’d begin with a small set of tools because the interface for each tool would take time to design. We thought we were going to need to bootstrap an ecosystem of tools that had been prepared to be used in these agent workflows.

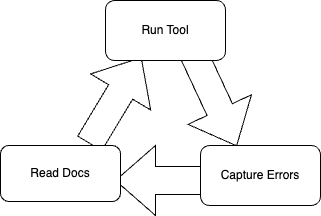

However, we learned that we can use a much more generic approach. Most tools already come with documentation, such as command-line help, examples, and man pages. Instead of treating each tool as something special, we are using an architecture where an agent responds to failures by reading documentation and trying again (Figure 2).

We see a process of experimenting with tools that is not unlike what we, as developers, do on the command line. Try a command line, read a doc, adjust the command line, and try again.
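The try, read, adjust, retry cycle can be sketched in a few lines. This is an illustration under stated assumptions, not our actual agent implementation: `plan`, `run`, and `read_docs` are hypothetical callbacks standing in for the LLM, the container runtime, and the tool's `--help` or man-page output.

```python
from typing import Callable, List, Optional, Tuple

# (exit_code, combined_output) returned by whatever executes the tool call.
RunResult = Tuple[int, str]


def run_with_docs_retry(
    plan: Callable[[str, Optional[str], Optional[str]], List[str]],
    run: Callable[[List[str]], RunResult],
    read_docs: Callable[[], str],
    task: str,
    max_attempts: int = 3,
) -> Tuple[bool, list]:
    """Try a command; on failure, read the docs and plan again.

    All three callbacks are hypothetical stand-ins (see the lead-in).
    Returns (succeeded, history of (command, exit_code) pairs).
    """
    docs: Optional[str] = None
    error: Optional[str] = None
    history = []
    for _ in range(max_attempts):
        command = plan(task, docs, error)  # try (or adjust) a command line
        code, output = run(command)
        history.append((command, code))
        if code == 0:
            return True, history
        error = output                     # feed the failure back to the planner
        if docs is None:
            docs = read_docs()             # read the documentation once, then retry
    return False, history
```

Capping the number of attempts keeps a tool that can never succeed, for example one with a missing dependency, from looping forever.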

The value of this kind of looping has changed our expectations. Step one is simply pulling the tool into Docker Hub and seeing whether the agent can use it with nothing more than its out-of-the-box documentation. We are also pulling open source software (OSS) tools directly from nixpkgs, which gives us access to tens of thousands of different tools to experiment with.

Docker keeps our runtimes isolated from the host operating system, while the nixpkgs ecosystem and maintainers provide a rich source of OSS tools.

As expected, packaging tools for agents still runs into issues that force us to rethink how the tools are packaged. For example, the prompt shown above may have generated the correct tool call on the first try, but the ImageMagick container failed on its first run with this unfriendly error message:

function call failed call exited with non-zero code (1): Error: sh: 1: gs: not found

Fortunately, feeding that error back into the LLM resulted in the suggestion that convert needs another tool, called Ghostscript, to run successfully. Our agent was not able to fix this automatically today. However, we adjusted the image build slightly, and vonwig/imagemagick:latest no longer has this issue. This is an example of something we only need to learn once.
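Errors of this shape are regular enough that a packaging check could flag them before the LLM is involved. The sketch below is an assumption about how such a check might look, not something our agent does today: `missing_binary` is a hypothetical helper, and the pattern only covers shell messages like the one above.

```python
import re
from typing import Optional

# Matches shell messages such as "sh: 1: gs: not found".
_NOT_FOUND = re.compile(
    r"sh: (?:\d+: )?(?P<binary>[^\s:]+): (?:command )?not found"
)


def missing_binary(error_text: str) -> Optional[str]:
    """Return the name of the binary the shell could not find, if any."""
    match = _NOT_FOUND.search(error_text)
    return match.group("binary") if match else None


error = (
    "function call failed call exited with non-zero code (1): "
    "Error: sh: 1: gs: not found"
)
print(missing_binary(error))  # prints: gs
```

Knowing that the missing executable is `gs` still leaves the step of mapping it to a package (here, Ghostscript), which is where the LLM's suggestion was useful.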

The LLM figured out convert on its own. But its agency came from the addition of a tool.

Read the Docker Labs GenAI series to see more of what we’ve been working on.