What Stands Between IT and Business Success? Data Complexity

By George Trujillo, Principal Data Strategist, DataStax

“Water, water everywhere, and all the boards did shrink;

Water, water everywhere, nor any drop to drink.”

– “The Rime of the Ancient Mariner” by Samuel Taylor Coleridge

Any enterprise data management strategy has to begin with addressing the 800-pound gorilla in the corner: the “innovation gap” that exists between IT and business teams. It’s a common occurrence in all types of enterprises, and it’s difficult to wrestle to the ground. IT teams grapple with an ever-increasing volume, velocity, and variety of data, which pours in from sources like apps and IoT devices. At the same time, business teams can’t easily access, understand, trust, or work with the data that matters most to them. This scarcity of quality data can feel like dying of thirst in the middle of the ocean.

Here, I’ll discuss the most common cause of the innovation gap and how to bridge it.

Complexity is the enemy of innovation

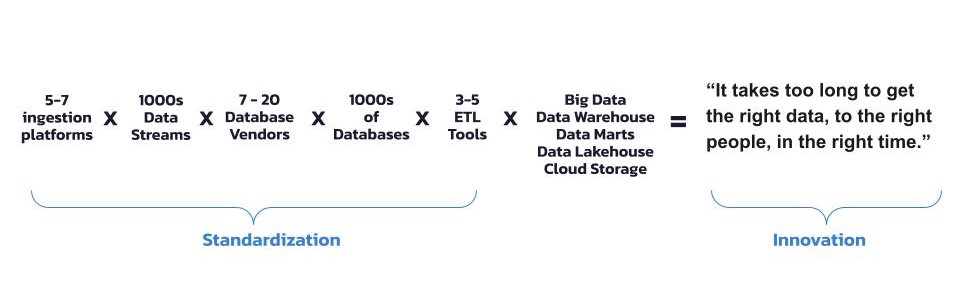

Most organizations (81%) don’t have an enterprise data strategy that enables them to fully capitalize on their data assets, according to Accenture. IT funding might be on the rise, but the business ROI from technology investments isn’t as high as it should be. There’s often a wide gap between the goal of being data-driven and the business transformation that data actually delivers. A real-time data technology stack has to shrink this innovation gap for the business.

Analysts and data scientists need flexibility when working with data; experimentation fuels the development of analytics and machine learning models. If data is difficult to work with, experimentation slows down, and consequently, so does innovation. Most companies operate a variety of data ingestion platforms, numerous messaging and queuing data streams, and databases from multiple vendors. How well data interoperates across the elements of a data ecosystem determines how effectively that data delivers business value.

These disparate systems often create a level of complexity that directly affects the speed at which the business can deliver insights. It also affects data security, governance, and change management. (Learn more about how to remedy data complexity in the DataStax eBook, The CIO’s Guide to Shattering Data Silos.) Organizations can’t buy or hire their way out of this complexity. A holistic enterprise strategy that architects and aligns technology solutions toward greater standardization is the best way to hack a path out of this data complexity jungle. Or, to quote MIT’s Jeanne Ross, “Sustained success requires simplifying non-value-adding complexity.”

The wrong way: Siloed data ecosystems

Often, enterprise data ecosystems are built with a mindset that’s too narrow. Many organizations house their data in a variety of “fiefdoms” or silos. More often than not, these piecemeal data architectures crop up over time as organizations, in an attempt to harness the value of their data, invest in a variety of point technology solutions. Each solution might have worked for one team, one project, or one application, but the end result is data locked in silos across the organization.

Digital transformation has actually contributed to this complexity. As services and applications became increasingly decoupled and fine-grained (think microservices), they multiplied, and so did the operational databases and abstraction layers that support them. Most big enterprises support dozens of mostly proprietary and highly disconnected NoSQL and SQL databases. That translates into a large number of costly licenses and substantial operational maintenance overhead.

While the notion of looking at data as a collection of silos is obviously outdated, I’ve come across plenty of organizations that have made the mistake of trying to improve data architectures on a “silo-by-silo” basis.

In effect, this creates pockets of individual technologies – and support teams to go along with them – that speak different languages.

A holistic ecosystem view

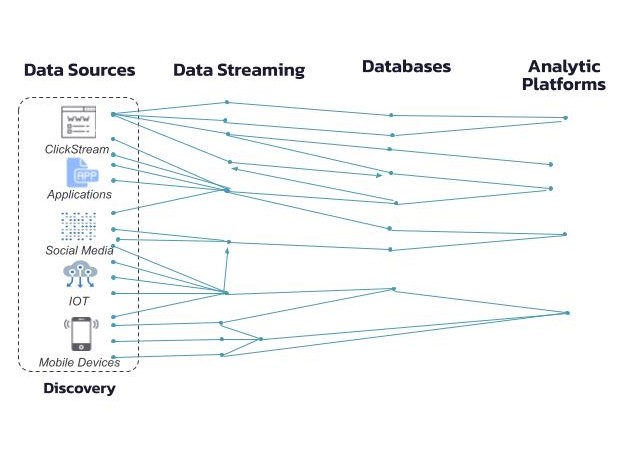

Reducing the number of different “languages” reduces the translation time when integrating data. The goal is to reduce complexity, so changes have a positive compound effect across the entire ecosystem – not just in one particular segment of the architecture. To improve the efficiency of an ecosystem, it’s critical to take a step back and gain a holistic view of the data flow through the ecosystem.

Data moves through a data ecosystem via applications, data streams, databases, and analytic platforms. The easier data can flow across the ecosystem, the smoother data integration and correlation for analytics becomes. It’s about getting the right data to the right people at the right time, and that can only happen if an organization reduces complexity. This increases the amount of data that can be used for business insights – and goes a long way to reducing the data scarcity situation.

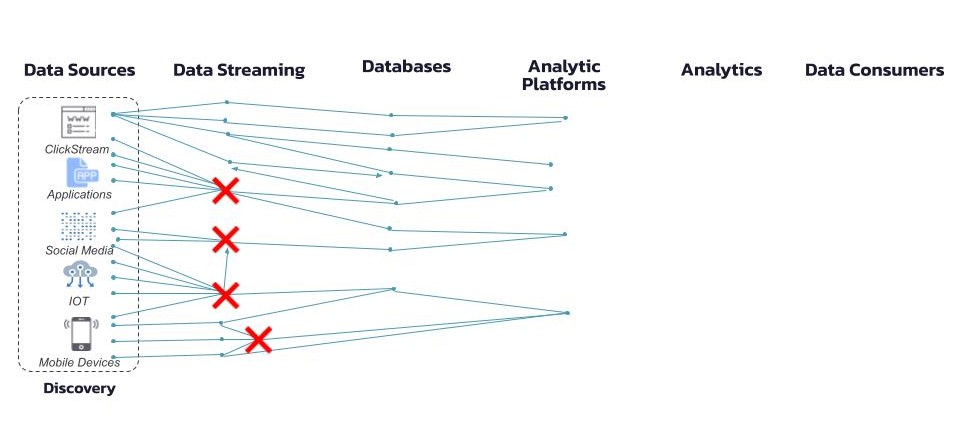

Innovation at integration points

It’s also important to consider where innovation occurs with real-time data: it often happens at data integration points. These integration points (where, for example, data from clickstreams, loyalty programs, and shipping updates is combined) can become friction points between IT and business. Changes at these points, prompted by a business request for new insights, can be difficult and time-consuming to make. When data is streamed from any of a variety of technologies (Apache Kafka, Apache Pulsar, JMS, or Qlik, for example), teams that manage different technologies with different skill sets need to work together. It can take weeks or even months before those changes yield data whose quality the business can rely on.
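
To make the idea of an integration point concrete, here is a minimal Python sketch, assuming three hypothetical feeds (clickstream events, loyalty balances, and shipping updates) that have already been consumed into lists of dicts sharing a `customer_id` field. The record shapes, field names, `CustomerView`, and `integrate` are illustrative assumptions, not part of any DataStax, Kafka, or Pulsar API.

```python
from dataclasses import dataclass, field


# Hypothetical per-customer view built at the integration point.
@dataclass
class CustomerView:
    customer_id: str
    page_views: int = 0
    loyalty_points: int = 0
    open_shipments: list = field(default_factory=list)


def integrate(clicks, loyalty, shipments):
    """Combine three hypothetical source feeds into one view per customer."""
    views = {}

    def view_for(customer_id):
        # Create the customer's view the first time any feed mentions them.
        if customer_id not in views:
            views[customer_id] = CustomerView(customer_id=customer_id)
        return views[customer_id]

    for event in clicks:  # clickstream: count page views
        view_for(event["customer_id"]).page_views += 1
    for record in loyalty:  # loyalty program: last record seen wins
        view_for(record["customer_id"]).loyalty_points = record["points"]
    for update in shipments:  # shipping updates: track undelivered orders only
        if update["status"] != "delivered":
            view_for(update["customer_id"]).open_shipments.append(update["order_id"])
    return views


if __name__ == "__main__":
    clicks = [{"customer_id": "c1", "url": "/shoes"}, {"customer_id": "c1", "url": "/cart"}]
    loyalty = [{"customer_id": "c1", "points": 120}]
    shipments = [
        {"customer_id": "c1", "order_id": "o9", "status": "in_transit"},
        {"customer_id": "c2", "order_id": "o4", "status": "delivered"},
    ]
    for view in integrate(clicks, loyalty, shipments).values():
        print(view)
```

Even in this toy form, a new business request (say, folding in returns data) means changing `integrate` and coordinating with whichever team owns the new feed, which is where the IT-business friction described above tends to surface.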

Data innovation in lines of business

Lines of business understand the data in their domain. They also know that useful data resides in other business domains, and they want to integrate it with their own. Lines of business need to be empowered to easily access real-time data downstream so they can innovate with it without depending on technology teams.

The challenge is that developers working in particular lines of business often lack expertise in streaming and queuing technologies. A key way to solve this problem is to build a real-time data ecosystem that just works: one that makes it easy for developers to innovate with data from anywhere across the organization, which goes a long way toward accelerating business innovation.

Selecting a real-time data technology stack

Applications, external streaming sources, and databases generate data streams. The more easily all of these can integrate, the faster teams can make the data changes that new insights require. To support standardization and help bridge the innovation gap, messaging and queuing platforms need to handle different types of data sources (mobile, IoT, and databases, for example) and accept data flows from different ingestion platforms.
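
One way to picture a platform that handles different types of data sources is a thin adapter layer that maps each source's native shape into one common envelope before it is published to a topic or queue. The following is a minimal Python sketch under assumed inputs: the `Envelope` type, the adapter functions, and every field name (`ts_ms`, `device_id`, `commit_ts`, and so on) are hypothetical, and a real deployment would more likely define such schemas with the messaging platform's own schema tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Dict


@dataclass(frozen=True)
class Envelope:
    source_type: str          # "mobile", "iot", or "database" in this sketch
    key: str                  # routing/partition key
    event_time: datetime      # always normalized to UTC
    payload: Dict[str, Any]


def from_mobile(event: dict) -> Envelope:
    # Hypothetical mobile event: epoch milliseconds plus a user id.
    return Envelope("mobile", event["user_id"],
                    datetime.fromtimestamp(event["ts_ms"] / 1000, tz=timezone.utc),
                    {"action": event["action"]})


def from_iot(reading: dict) -> Envelope:
    # Hypothetical sensor reading: ISO-8601 timestamp with a UTC offset.
    return Envelope("iot", reading["device_id"],
                    datetime.fromisoformat(reading["time"]).astimezone(timezone.utc),
                    {"temperature_c": reading["temp"]})


def from_db_change(change: dict) -> Envelope:
    # Hypothetical change-data-capture row: epoch seconds plus a primary key.
    return Envelope("database", str(change["pk"]),
                    datetime.fromtimestamp(change["commit_ts"], tz=timezone.utc),
                    change["after"])


# One adapter per source type; consumers only ever see Envelope.
ADAPTERS = {"mobile": from_mobile, "iot": from_iot, "database": from_db_change}


def normalize(source_type: str, raw: dict) -> Envelope:
    return ADAPTERS[source_type](raw)


if __name__ == "__main__":
    print(normalize("iot", {"device_id": "d7", "time": "2024-06-01T12:00:00+02:00", "temp": 21.5}))
```

The design choice here is that downstream consumers learn a single shape rather than one per source, which is the "fewer languages" idea from the holistic-ecosystem section expressed in code.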

Similarly, a database needs to support high-velocity data activity and multiple data models. This kind of horizontal data flow alignment – among applications, external data sources, databases, in-memory caches, dashboards, and analytic platforms – is a key element of a modern, real-time data technology stack. Vertical alignment is important too: the ability to easily deploy on premises, in the cloud, across multiple regions, and across multiple clouds.

Remember that the goal is to reduce the number of technology platforms, not increase it. Adding more software to migrate to the cloud, for example, is an anti-pattern that works against moving with speed and agility. If the applications need to move, so does their ecosystem, including the messaging/queuing platforms and databases they send data to.

Change the complexity mindset

Any real-time data management strategy has to change the complexity mindset, address IT-business friction points, and empower lines of business, analysts, and data scientists with data that is easy to work with. Business strategy emerges from data more easily when a data ecosystem is viewed holistically and dynamically rather than as static and siloed. A “big picture” view of an organization’s data estate (and a real-time data stack to support it) is how the innovation gap that so often appears between IT and business gets bridged, and how the thirst for accessing data for innovation is satisfied.

About George Trujillo:

George Trujillo is principal data strategist at DataStax. Previously, he built high-performance teams for data-value-driven initiatives at organizations including Charles Schwab, Overstock, and VMware. George works with CDOs and data executives on the continual evolution of real-time data strategies for their enterprise data ecosystem.